Research Article: 2021 Vol: 24 Issue: 6S

Using E-Learning Factors to Predict Student Performance in the Practice of Precision Education

Abdulla Alsharhan, The British University in Dubai

Said A. Salloum, University of Sharjah

Ahmad Aburayya, Dubai Health Authority

Abstract

The emergence of COVID-19 has accelerated digital transformation while simultaneously disrupting traditional education. The implementation of distance education practices produced a massive amount of data generated by the deployed learning management systems. Educational data mining tools using machine learning methods can produce thorough student-level insights, an approach that has become known as precision education. This study investigates a convenient approach to analyzing a data set of 480 students in the Middle East using three supervised machine learning methods (artificial neural networks, decision trees, and naïve Bayes) to predict overall performance using SPSS. The findings indicate that the naïve Bayes algorithm achieved the highest accuracy of 89.85%, while the artificial neural network algorithm achieved the lowest variance, with a standard deviation of 2.37. Beyond accuracy, the other machine learning models provide additional valuable insights in the SPSS environment, such as visual representation and the normalized importance of independent variables. Moreover, to address cases where student data are missing, the data set was evaluated to determine whether e-learning parameters alone can predict student performance. The findings suggest that e-learning parameters alone can predict student performance with an average accuracy of 84.49%. This study contributes to limiting grade inflation caused by educational malpractice in the age of online learning.

Keywords

Educational Data Mining, Academic Performance, Prediction Model, E-Learning, Precision Education, Performance Education, Artificial Neural Network, Decision Tree, Naïve Bayes, Machine Learning, SPSS, Educational Technologies, Human-Computer Interaction

Introduction

The COVID-19 pandemic has accelerated digital transformation in a novel manner (Taryam et al., 2020; Ahmad et al., 2021; Al-Maroof et al., 2021; Taryam et al., 2021). Education is among the areas that the pandemic has heavily impacted (Capuyan et al., 2021). The learning process was disrupted, cutting off 1.5 million students from physical attendance (Kupchina, 2021). Consequently, new blended learning methods are emerging, and e-learning and distance learning are becoming the norm. At the same time, the learning analytics generated from e-learning platforms can be harnessed in many ways (Salloum et al., 2017; Alshurideh et al., 2019; Al Kurdi et al., 2020). Educational Data Mining (EDM) is commonly used to search for and detect patterns to improve educational performance. The new EDM approaches that are emerging offer more personalized education in what is known as “precision education”. This refers to obtaining “the right intervention in place for the right person for the right reason” (Cook, Kilgus & Burns, 2018). The precision education approach was borrowed from precision medicine, and its methods are used in EDM to diagnose, predict, treat, and prevent undesirable educational behavior (Yang et al., 2021).

In order to provide the correct intervention, we first need to identify and classify specific patterns in students, whether they are high-performing students or those who need assistance. Making performance predictions based on student grades is widely used, but little emphasis is placed on students' use of Learning Management Systems (LMS) (Habes et al., 2019). Data extracted from LMS behavioral factors, such as raising hands, posting in a discussion group, and viewing an announcement or the learning materials, could be harnessed for valuable insights. However, little is known about whether e-learning insights alone can also predict performance. Besides, EDM tools are usually too sophisticated for educational professionals and might require coding skills.

On the other hand, some machine learning (ML) tools, such as SPSS and Weka, offer easy-to-use software with a simpler user experience (Al Kurdi et al., 2021; Salloum et al., 2021). This study aims to review some ML methods in EDM to predict student performance and to determine whether e-learning parameters alone can provide decent prediction accuracy. The remainder of the paper covers the related work, the methodology used, the results achieved, and finally, the discussion and conclusion.

Related Work

Aydogdu (2020) attempted to predict the performance of 3,518 college students who actively learn through an LMS using Artificial Neural Networks (ANNs), based on the factors of gender, topic score, time spent, number of live sessions attended, and number of views of archived materials. The study achieved an average accuracy of 80.47% and found that the number of attendances and the time spent on archived materials contributed most to the prediction outcomes. Lemay & Doleck (2020) predicted the completion rate of Massive Open Online Course (MOOC) assignments from video viewing behavior. Finally, Emirtekin, Karatay & Ki (2020) predicted the success rate of online college students using several machine learning methods: naïve Bayes, logistic regression, K-Nearest Neighbor (KNN), Support Vector Machines (SVMs), Artificial Neural Networks (ANNs), and random forest. They found that KNN achieved the highest accuracy (98.5%).

Gap Analysis

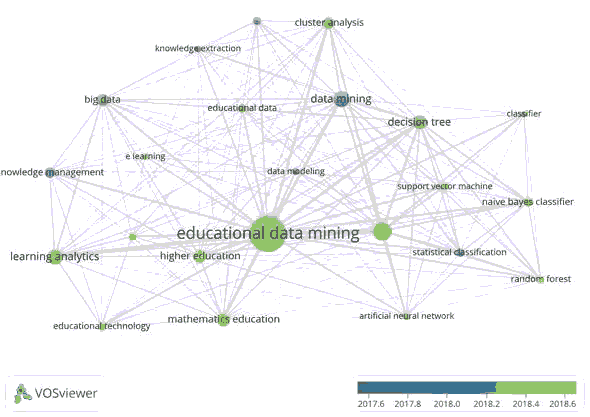

In the context of computer science, Figure 1 shows the keywords that most frequently co-occur with the term “educational data mining”, with a minimum of 50 occurrences between 2016 and 2021, using VOS Viewer and Microsoft Academic. We can notice the absence of the terms "prediction model," "academic performance," and "precision education," indicating that the research remains insufficient in these areas.

Nevertheless, most attempts to predict student performance focus on achieving the highest accuracy using machine learning classifier models, but not on how practical or convenient they are to use. Plenty of prediction applications targeted conveniently accessible data, such as data from universities implementing education management systems (e.g. Blackboard). However, these studies may be far from practical application in most cases, as only a few studies focus on lower educational levels. The review by Luan & Tsai (2021) on using ML methods for precision education mentions a gap in ML application at lower educational levels, such as the elementary and secondary levels. Prediction methods are often sophisticated and require a professional data analyst to carry out the process. Such an analyst is not available in most schools, or is only available at the level of the city educational district, which does not allow timely intervention when needed. There is also a gap in the immediacy of prediction and intervention (Wu et al., 2021).

Regarding human-computer interaction, easy-to-use EDM tools with a Graphical User Interface (GUI) are crucial so that educational professionals can access them in a timely manner. Tools such as SPSS and Weka can provide valuable insights to education professionals without programming. Moreover, in many cases the demographic and parental data are missing, so it is essential to determine whether e-learning parameters alone can predict overall student performance. This study focuses on analyzing educational data mining methods that are easily accessible to educators and teachers, thus accelerating interventions to improve student performance. Therefore, this study attempts to answer the following research questions:

RQ 01: What are some convenient ML methods to predict student performance at lower educational levels?

RQ 02: Can e-learning parameters alone predict student performance?

Methodology

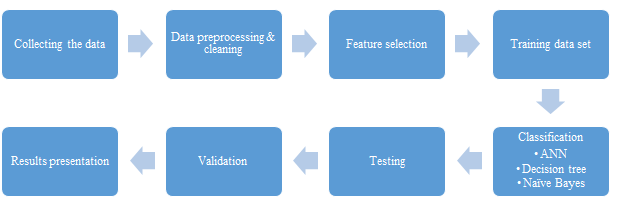

In this study, we present a model to predict student performance by testing several machine learning methods and evaluating whether distance education data alone can predict student performance. The goal of this approach is to evaluate the features that each model provides, and to find out the reasons that may have an impact on a student's academic performance. Figure 2 illustrates the main steps considered in the proposed methodology.

Data Source and Data Selection

The data set used in this research was obtained from Kaggle, a subsidiary of Google and an online community of data scientists (Lardinois, Lynley & Mannes, 2017). The data was uploaded by Amrieh, Hamtini & Aljarah (2016), who exported it from a Learning Management System (LMS) called Kalboard 360, a multi-agent LMS providing synchronized access to learning resources from any online device. All data were cleaned, and all categorical values (such as nationality, place of birth, education level, and performance) were converted to numerical data using automatic recoding. This study used a sample of 480 students from elementary, middle, and high school levels living in the Middle East, whose educational level ranged from the second grade to the twelfth grade.

Conceptual Model of Data Preprocessing

Educators seek to help students who have poor performance. Traditional assessment methods (such as grades) do not give indications until it is too late. Therefore, convenient methods that enable early intervention are highly beneficial. In this study, performance was classified into two categories: good and poor. Behavioral features beyond demographic data were considered to identify students in need of intervention. The data used included demographic information such as nationality, gender, and place of birth, while academic data included educational stage level, semester, subjects, and the number of days of absence. Parental data were also included, such as participation in the questionnaire, level of satisfaction, and which parent is responsible for the student (father or mother). The behavioral data included the number of participation instances in discussions, the number of times students visited educational resources, the number of times they raised their hand, and the number of times they viewed announcements. Table 1 provides a full description of the measures used in the data set.

Table 1 Descriptions of Measures of Labeling Features

| Category | Item | Data type | Description |
|---|---|---|---|
| Demographic information | Gender | Categorical | Student's gender (Male, Female) |
| | Nationality | Categorical | Student's nationality (Kuwait, Lebanon, Egypt, Saudi Arabia, USA, Jordan, Venezuela, Iran, Tunis, Morocco, Syria, Palestine, Iraq, Libya) |
| | Place of birth | Categorical | Student's place of birth (Kuwait, Lebanon, Egypt, Saudi Arabia, USA, Jordan, …) |
| Educational information | Educational level | Ordinal | Educational stage of the student (Elementary, middle school, high school) |
| | Grade level | Ordinal | The educational grade of the student (G-01, G-02, …, G-12) |
| | Classroom | Categorical | The classroom ID of the student (A, B, C) |
| | Topic | Categorical | Course topic (English, Spanish, French, Arabic, IT, Maths, Chemistry, Biology, Science, History, Quran, Geology) |
| | Semester | Ordinal | The academic term of the year (Fall, Spring) |
| | Absence Days | Categorical | Number of days the student did not attend school (above-7, under-7) |
| | Performance | Ordinal | Student's level of performance (Low, Medium, High) |
| | Need attention | Categorical | Whether the student has low performance (score from 0 to 69) and needs attention (Yes, No) |
| E-Learning information | Raised hand | Numerical | The number of times a student raised a hand (Numeric) |
| | Visited resources | Numerical | The number of times a student visited a resource (Numeric) |
| | Viewed announcements | Numerical | The number of times a student viewed an announcement (Numeric) |
| | Discussion groups | Numerical | The number of times a student participated in a discussion group (Numeric) |
| Parental information | Responsible parent | Categorical | Parent responsible for the student (Father, Mother) |
| | Parent Survey | Categorical | Parent participation in the school survey (Yes, No) |
| | Parent Satisfaction | Categorical | Parent satisfaction level (Good, Bad) |

Dataset

Table 1 presents the seventeen factors identified in this study; each row of the data set provides the values of these factors for a particular student. The original data did not contain any empty cells, so no missing-value processing was required. Accordingly, all 480 rows of data were included for further analysis. The purpose of this study is the early prediction of low-performing students who score from 0 to 69 and need attention. Therefore, an additional factor (#11, "Need attention") was added to simplify the process and isolate only the students who need attention. The new factor was derived from the student performance factor (#10) by merging the medium and high levels and giving them a value of 1 (no intervention required), while giving the low level a value of 0 (intervention required).
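The derivation of the binary factor described above can be sketched in a few lines of Python. This is only an illustration of the merging rule; the function name `needs_attention_code` is hypothetical, and the study itself performed the recoding in SPSS. The class frequencies are the ones reported in Table 2.

```python
# Illustrative sketch of the recoding rule; not the SPSS procedure used in the study.
PERFORMANCE_LEVELS = ("Low", "Medium", "High")


def needs_attention_code(performance: str) -> int:
    """Return 0 if intervention is required (Low), 1 otherwise (Medium or High)."""
    if performance not in PERFORMANCE_LEVELS:
        raise ValueError(f"unknown performance level: {performance!r}")
    return 0 if performance == "Low" else 1


# Frequencies of the three performance levels as reported in Table 2.
performance_counts = {"Low": 127, "Medium": 211, "High": 142}

# Merge Medium and High into code 1 and keep Low as code 0.
binary_counts = {0: 0, 1: 0}
for level, count in performance_counts.items():
    binary_counts[needs_attention_code(level)] += count

print(binary_counts)  # {0: 127, 1: 353}
```

After merging, the two classes are imbalanced (127 students needing intervention versus 353 who do not), which is worth keeping in mind when reading overall accuracy figures.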

Table 2 Participants’ Demographics

| Measure | Items | Frequency | Percentage |
|---|---|---|---|
| Gender | Male | 175 | 36.5 |
| | Female | 305 | 63.5 |
| Stage Level | Lower level | 199 | 41.5 |
| | Middle School | 248 | 51.7 |
| | High School | 33 | 6.9 |
| Nationality | Kuwait | 179 | 37.3 |
| | Jordan | 172 | 35.8 |
| | Other | 129 | 26.9 |
| Place of Birth | Kuwait | 180 | 37.5 |
| | Jordan | 176 | 36.7 |
| | Other | 124 | 25.8 |
| Semester | Fall | 245 | 51 |
| | Spring | 235 | 49 |
| Topic | Arabic | 59 | 12.3 |
| | Biology | 30 | 6.3 |
| | Chemistry | 24 | 5 |
| | English | 45 | 9.4 |
| | French | 65 | 13.5 |
| | Geology | 24 | 5 |
| | History | 19 | 4 |
| | IT | 95 | 19.8 |
| | Math | 21 | 4.4 |
| | Quran | 22 | 4.6 |
| | Science | 51 | 10.6 |
| | Spanish | 25 | 5.2 |
| Parent relation | Father | 283 | 59 |
| | Mother | 197 | 41 |
| Parent Satisfaction | Bad | 188 | 39.2 |
| | Good | 292 | 60.8 |
| Student Performance | Low | 127 | 26.5 |
| | Medium | 211 | 44 |
| | High | 142 | 29.6 |
| Classroom | A | 283 | 59 |
| | B | 167 | 34.8 |
| | C | 30 | 6.3 |
| Absent days | Under-7 | 289 | 60.2 |
| | Above-7 | 191 | 39.8 |

Implementation of Student Performance Prediction

Several similar previous studies examined the relationships between performance and individual student characteristics using traditional statistical models, which are usually designed to infer relationships between variables. In comparison, machine learning models can also be used to increase predictive accuracy. When it comes to precision education decisions, recent studies suggest that supervised machine learning approaches are most commonly used to create predictive models for classification and prediction tasks. The most widely used supervised learning algorithms are support vector machines, linear regression, logistic regression, naïve Bayes, and neural networks (multilayer perceptron) (Yang, 2021). For the purpose of this experiment, and since the resources were limited, three machine learning techniques were used: neural networks, decision trees, and naïve Bayes.

Environment

The experiment was conducted on a Mac computer with 16 GB of 2133 MHz LPDDR3 memory and a 2.8 GHz quad-core Intel Core i7 CPU. The premium version of IBM SPSS Statistics was used as the primary data mining tool.

Evaluation Criteria

A confusion matrix was used to describe the performance of the classifier model, where the matrix includes the true values versus the predicted values, and whether they are true or false, as shown in Table 3.

Table 3 Confusion Matrix

| Sample | Observed | Predicted: Negative | Predicted: Positive |
|---|---|---|---|
| Training (70%) | Negative | True Negative (TN) | False Positive (FP) |
| | Positive | False Negative (FN) | True Positive (TP) |
| Testing (30%) | Negative | True Negative (TN) | False Positive (FP) |
| | Positive | False Negative (FN) | True Positive (TP) |
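As a minimal sketch of how the cells of a confusion matrix translate into the reported figures, the Python functions below compute overall accuracy and the per-class "Percent Correct" values. The function names are illustrative, and treating "Bad" as the negative class and "Good" as the positive class is an assumption made here to match the column order of the tables. The example values are the neural network training counts from Table 4.

```python
def accuracy(tn: int, fp: int, fn: int, tp: int) -> float:
    """Overall accuracy: correctly classified cases divided by all cases."""
    total = tn + fp + fn + tp
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return (tn + tp) / total


def percent_correct_per_class(tn: int, fp: int, fn: int, tp: int):
    """Share of correct predictions within each observed class."""
    negative_correct = tn / (tn + fp)  # observed negatives predicted as negative
    positive_correct = tp / (tp + fn)  # observed positives predicted as positive
    return negative_correct, positive_correct


# Neural network training sample from Table 4 (Bad = negative, Good = positive).
train_accuracy = accuracy(tn=74, fp=16, fn=11, tp=225)
bad_correct, good_correct = percent_correct_per_class(tn=74, fp=16, fn=11, tp=225)

print(f"{train_accuracy:.1%}")  # 91.7%
print(f"{bad_correct:.1%} {good_correct:.1%}")  # 82.2% 95.3%
```

Overall accuracy can hide a weaker result on the smaller class, which is why the per-class rows of the confusion matrix are reported alongside it.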

Results

The following are the results and the answers to the questions addressed at the beginning of this paper. After extracting the results from the three selected machine learning techniques, we analyzed and compared them to determine which classifier is the most accurate and stable.

Artificial Neural Network

The first model was based on an Artificial Neural Network (ANN), which can be described as a simplified model of how the human brain handles data. The model learns by examining each row of features, predicting a value, adjusting the factor weights based on the prediction error, and repeating this process iteratively until a termination criterion is met (Stevens, Antiga & Viehmann, 2020).

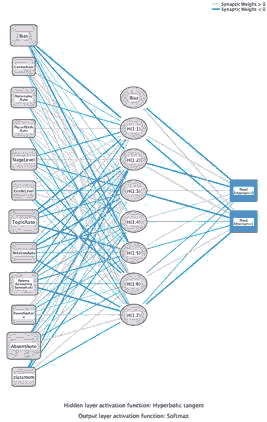

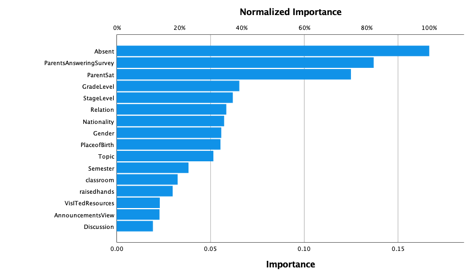

The neural network classifier was trained on 70% of the data set and tested on the remaining 30%. Figure 3 shows how the hidden layer connects the input factors with the dependent variable. One of the advantages of using the ANN method in the SPSS environment is that it gives a normalized importance for each tested factor. Figure 4 shows how much each factor impacts the classification model’s decision, with the student absence days factor having the highest impact on this model. Unlike the statistical approach, one of the shortfalls of machine learning approaches is that they might yield different results on every run, or reach slightly different conclusions every time the model is trained. Since the training set is relatively small, such issues are to be expected. A solution is to perform a 10-fold cross-validation and take the final accuracy as the average of 10 runs, each of which uses a different test set.
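The 10-fold cross-validation scheme mentioned above can be sketched as follows. This is a generic illustration in plain Python, not the procedure run in SPSS; the function name `k_fold_indices` and the fixed seed are assumptions chosen for the example.

```python
import random


def k_fold_indices(n_rows: int, k: int = 10, seed: int = 0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_rows:
        raise ValueError("k must be between 2 and n_rows")
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)  # shuffle once so folds are random
    # Fold i takes every k-th shuffled index, starting at position i.
    folds = [indices[i::k] for i in range(k)]
    for i, test_fold in enumerate(folds):
        # Training data is every fold except the one held out for testing.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test_fold


splits = list(k_fold_indices(480, k=10))
print(len(splits), len(splits[0][0]), len(splits[0][1]))  # 10 432 48
```

With 480 rows, each of the ten folds holds out 48 students for testing and trains on the remaining 432, so every student is used for testing exactly once.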

After the first attempt, the model predicted students who need intervention with an accuracy of 91.7% on the training sample. The testing sample validated these results at 84.4% overall accuracy, indicating a very good prediction rate of more than eight out of ten students. Table 4 shows the rest of the results of the ANN confusion matrix.

Table 4 Neural Network Confusion Matrix

| Sample | Observed | Predicted: Bad | Predicted: Good | Percent Correct |
|---|---|---|---|---|
| Training | Bad | 74 | 16 | 82.20% |
| | Good | 11 | 225 | 95.30% |
| | Overall Percent | 26.10% | 73.90% | 91.70% |
| Testing | Bad | 26 | 11 | 70.30% |
| | Good | 13 | 104 | 88.90% |
| | Overall Percent | 25.30% | 74.70% | 84.40% |

Decision Tree

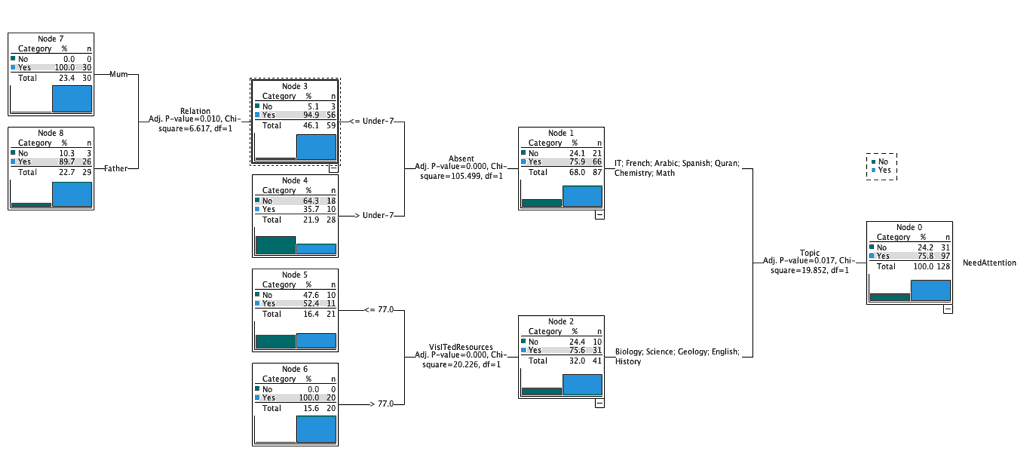

A decision tree is a decision-support tool that employs a tree-like model of decisions and potential outcomes. It makes predictions based on conditional control statements (Cleophas & Zwinderman, 2020). In an educational context, we can quickly identify whether a specific factor leads to low academic performance. By observing Figure 5, we can trace the factors associated with poor student performance. For example, when we force the system to sort the decision tree based on topics, one group of topics (Biology, Science, Geology, English and History) is classified as needing a different sort of intervention (visiting learning resources) to avoid poor performance, while for the second group of topics (IT, French, Arabic, Spanish, Quran, Chemistry and Maths), attending live class sessions might be enough to avoid poor performance.

After feeding the decision tree model with all the factors and training it on 70% of the data, we obtained an overall accuracy of 89.1% on the training set and 88.2% on the test set, which indicates decent accuracy for this type of data.

Table 5 Decision Tree Confusion Matrix

| Sample | Observed | Predicted: Bad | Predicted: Good | Percent Correct |
|---|---|---|---|---|
| Training | Bad | 60 | 28 | 68.20% |
| | Good | 6 | 217 | 97.30% |
| | Overall Percentage | 21.20% | 78.80% | 89.10% |
| Test | Bad | 24 | 15 | 61.50% |
| | Good | 5 | 125 | 96.20% |
| | Overall Percentage | 17.20% | 82.80% | 88.20% |

Naïve Bayes

Naïve Bayes is a simple learning method that employs the Bayes rule, together with a strong assumption that the attributes are conditionally independent of one another given the class. Even though this independence assumption is frequently violated in practice, naïve Bayes typically produces competitive classification accuracy (Webb et al., 2011). As with the previous models, we trained the naïve Bayes model on 70% of the data and tested it on the remaining part of the data set for validation. The first attempt achieved 89.6% accuracy on the training sample. The test sample achieved an even higher accuracy of 91.6%, which is the highest first-attempt result among the three ML techniques.
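To make the Bayes rule and the conditional independence assumption concrete, the following is a minimal categorical naïve Bayes sketch in plain Python. It is not the implementation used in the study: the class name, the Laplace smoothing parameter `alpha`, and the six toy rows (an absence band and a made-up activity level) are all assumptions for illustration only.

```python
import math
from collections import Counter, defaultdict


class CategoricalNaiveBayes:
    """Naive Bayes for categorical features with Laplace smoothing."""

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha  # smoothing constant; avoids zero probabilities

    def fit(self, rows, labels):
        self.class_counts = Counter(labels)
        # value_counts[(feature_index, label)][value] -> number of occurrences
        self.value_counts = defaultdict(Counter)
        self.feature_values = [set() for _ in rows[0]]
        for row, label in zip(rows, labels):
            for i, value in enumerate(row):
                self.value_counts[(i, label)][value] += 1
                self.feature_values[i].add(value)
        return self

    def predict(self, row):
        total = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            score = math.log(count / total)  # log prior P(class)
            for i, value in enumerate(row):
                seen = self.value_counts[(i, label)][value]
                n_values = len(self.feature_values[i])
                # Independence assumption: add log P(value | class) per feature.
                score += math.log((seen + self.alpha) / (count + self.alpha * n_values))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Made-up toy data: (absence band, activity level) -> performance class.
rows = [("under-7", "high"), ("under-7", "high"), ("under-7", "low"),
        ("above-7", "low"), ("above-7", "low"), ("above-7", "high")]
labels = ["Good", "Good", "Good", "Bad", "Bad", "Good"]

model = CategoricalNaiveBayes().fit(rows, labels)
print(model.predict(("above-7", "low")))   # Bad
print(model.predict(("under-7", "high")))  # Good
```

Working in log space keeps the product of many small probabilities numerically stable, and the smoothing term keeps a value never seen with a class from forcing that class's probability to zero.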

Table 6 Naïve Bayes Confusion Matrix

| Sample | Observed | Predicted: Bad | Predicted: Good | Percent Correct |
|---|---|---|---|---|
| Training | Bad | 66 | 12 | 84.60% |
| | Good | 22 | 226 | 91.10% |
| | Overall Percent | 27.00% | 73.00% | 89.60% |
| Test | Bad | 40 | 9 | 81.60% |
| | Good | 4 | 101 | 96.20% |
| | Overall Percent | 28.60% | 71.40% | 91.60% |

E-Learning Parameters vs. All Parameters

The second objective of this study is to determine whether e-learning parameters alone (number of raised hands, visited resources, viewing announcements, discussion groups) can identify students who need intervention.

The first attempt at running the model on the training set resulted in 84.7% accuracy. The testing sample achieved a similar result of 84.4% accuracy. This indicates that the model can correctly classify more than eight out of ten students based only on e-learning parameters extracted from the LMS.

Table 7 Naïve Bayes Confusion Matrix (E-Learning Only)

| Sample | Observed | Predicted: No | Predicted: Yes | Percent Correct |
|---|---|---|---|---|
| Training | No | 57 | 27 | 67.90% |
| | Yes | 23 | 219 | 90.50% |
| | Overall Percent | 24.50% | 75.50% | 84.70% |
| Test | No | 27 | 16 | 62.80% |
| | Yes | 8 | 103 | 92.80% |
| | Overall Percent | 22.70% | 77.30% | 84.40% |

Since the accuracy achieved by the prediction model based on e-learning parameters is close to the results achieved using all factors (i.e., including the demographic, educational, and parental parameters), we tested whether this difference is significant. Therefore, the following alternative hypothesis was proposed, with the null hypothesis being that there is no difference:

H1 There is a significant difference between using e-learning parameters only vs. using all parameters to predict students' potential failure.

Since naïve Bayes achieved the highest accuracy rate, we ran this experiment using this model. The test was conducted ten times for each configuration: once with the e-learning features only, and once with all the available factors. Table 8 shows the descriptive statistics of the accuracy results from both. After comparing both means using an independent-samples t-test, we found that the p-value (<0.001) is less than α=0.05. Therefore, we can reject the null hypothesis and support the alternative H1, suggesting that the difference between prediction using e-learning parameters alone and using all the parameters together is significant.

Table 8 Naïve Bayes Accuracy Rate

| Feature set | N | Minimum | Maximum | Mean | Std. Deviation |
|---|---|---|---|---|---|
| E-learning only | 10 | 77% | 91.10% | 84.49% | 4.63236 |
| All factors | 10 | 86% | 98% | 89.85% | 3.318 |
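For readers who want to see the mechanics of an independent-samples t-test, the sketch below computes the t statistic from the summary statistics in Table 8. It is an illustration only: it assumes equal variances (pooled estimate), works from the rounded summary values rather than the ten individual runs, and compares against the standard two-tailed critical value for α = 0.05 with 18 degrees of freedom instead of reproducing the p-value reported by SPSS. The function name is hypothetical.

```python
import math


def pooled_t_statistic(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Student's t statistic and degrees of freedom, assuming equal variances."""
    df = n_a + n_b - 2
    pooled_variance = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    standard_error = math.sqrt(pooled_variance * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / standard_error, df


# Summary statistics from Table 8: all factors vs. e-learning only.
t_stat, df = pooled_t_statistic(89.85, 3.318, 10, 84.49, 4.63236, 10)

# Two-tailed critical value from a standard t table (alpha = 0.05, df = 18).
T_CRITICAL_TWO_TAILED = 2.101

print(df, abs(t_stat) > T_CRITICAL_TWO_TAILED)  # 18 True
```

A t statistic whose absolute value exceeds the critical value leads to rejecting the null hypothesis of equal means at the 5% level, which is the same decision rule as comparing the p-value with α.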

Nevertheless, the average accuracy achieved with e-learning features alone is still above 80%, which is decent given the limited information used. This finding can allow instructors with limited knowledge about their students to identify most low-performing students using the e-learning parameters only. One of the critical applications of this finding is to detect cheating in final exams. For example, suppose a student's e-learning parameters classify him or her as a low-performing student, while the actual exam result shows a high-performance score. In this case, a flag would be raised on that student, who might need a special kind of attention, e.g. being asked to demonstrate how the results were obtained.
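The flagging idea described above could be sketched as follows. Everything in this example is hypothetical: the `StudentResult` fields, the student IDs and scores, and in particular the `high_cutoff` of 90, which is an assumed threshold for a "high-performance" score and would need to be set by the institution.

```python
from dataclasses import dataclass


@dataclass
class StudentResult:
    student_id: str
    predicted_needs_attention: bool  # model output based on e-learning parameters
    exam_score: float                # actual exam result on a 0-100 scale


def flag_for_review(results, high_cutoff: float = 90.0):
    """Return IDs of students predicted as low-performing who scored high."""
    return [
        r.student_id
        for r in results
        if r.predicted_needs_attention and r.exam_score >= high_cutoff
    ]


# Made-up records for illustration only.
results = [
    StudentResult("S1", True, 55.0),   # predicted low, scored low: consistent
    StudentResult("S2", True, 95.0),   # predicted low, scored high: flag
    StudentResult("S3", False, 92.0),  # predicted fine, scored high: consistent
]

print(flag_for_review(results))  # ['S2']
```

Because the classifier itself misclassifies some students, a flag is only a prompt for follow-up by the instructor, not evidence of malpractice.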

Validation

One of the challenges faced is that the machine learning results differed from one attempt to the next, even when training on the same data. This may be due to the relatively small data size, as machine learning models tend to show higher accuracy with more training data. Therefore, to stabilize the accuracy results, the experiment was repeated ten times for each model. Table 9 shows a summary of the results collected from the three machine learning techniques employed. Although the naïve Bayes technique yields a higher mean accuracy than the other two models (89.85%), the ANN showed the most stable results, with the lowest standard deviation (2.366), indicating that the ANN algorithm is the least sensitive to the data used during training (low variance).

Although the results are in line with the findings of Amrieh, Hamtini & Aljarah (2016), this experiment provided a higher accuracy rate (89.85%) compared to their proposed ensemble method (above 80%) on essentially the same data set. This suggests that it is possible to achieve higher accuracy by applying only one machine learning technique in a GUI tool such as SPSS, compared to Weka. This finding provides a convenient method that can be easily performed by educational professionals and data analysts to obtain swift results that allow timely intervention.

Table 9 Validating ML Methods Accuracy Results

| Method | N | Minimum (%) | Maximum (%) | Mean (%) | Std. Deviation |
|---|---|---|---|---|---|
| Neural Network | 10 | 84 | 92 | 87.48 | 2.366 |
| Decision Tree | 10 | 81 | 93 | 85.2 | 3.897 |
| Naïve Bayes | 10 | 86 | 98 | 89.85 | 3.318 |
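A run-to-run summary of the kind reported in Table 9 could be produced along the following lines. The ten accuracy values below are made up purely to demonstrate the calculation and are not the study's individual runs, which are not reported; the function name is also hypothetical. The sample standard deviation (dividing by n - 1) is used here.

```python
import statistics


def summarize_runs(accuracies):
    """Descriptive statistics for the accuracy of repeated training runs."""
    if len(accuracies) < 2:
        raise ValueError("need at least two runs to compute a standard deviation")
    return {
        "n": len(accuracies),
        "min": min(accuracies),
        "max": max(accuracies),
        "mean": statistics.mean(accuracies),
        "std": statistics.stdev(accuracies),  # sample standard deviation (n - 1)
    }


# Made-up accuracies (in percent) from ten hypothetical runs of one model.
example_runs = [84.0, 86.5, 88.0, 92.0, 87.0, 85.5, 89.0, 90.0, 86.0, 88.5]

summary = summarize_runs(example_runs)
print(summary["n"], summary["min"], summary["max"])  # 10 84.0 92.0
```

Comparing models on both the mean and the standard deviation separates the question of which model is most accurate on average from the question of which is least sensitive to the particular training split.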

Discussion

We have observed how naïve Bayes predicted students' performance with up to 98% accuracy. However, other ML techniques were able to provide additional insights that the naïve Bayes method could not. For example, the decision tree method provides a visual representation that is easy to understand and interpret. One of the insights that can be gained from applying the decision tree method to this data set is how to sort the type of intervention based on topic. The decision tree algorithm has its own drawbacks as well. Suppose we have two decision trees with the same accuracy. In this case, the simpler one should be chosen, as large decision trees tend to overfit the training data, achieving high accuracy on the training data but poor accuracy on test data or real-world data (Paek, Oh & Lee, 2006). The ANN method is considered a black box, as we cannot tell how much each independent variable impacts the dependent variable. However, using the ANN method in the SPSS environment has its own advantage: we were able to determine the degree of importance of each factor in the ANN classification. One of the disadvantages of ANNs is that they depend heavily on the training data, which might result in over-fitting and poor generalization (Ciaburro & Venkateswaran, 2017). Besides, the results might vary every time the test is conducted, but they tend to stabilize and improve if the training data are large and varied enough.

In the context of e-learning, we have observed that LMS parameters alone can predict performance with an average accuracy of 84.49% (and up to 91.1% in individual runs), which is useful when the data about the students are limited. Applications of this model can go beyond identifying low-performing students to detecting cases of grade inflation, a phenomenon in which students achieve higher grades than they deserve (Arenson, 2004). Since the pandemic, schools and academic institutions have been conducting online examinations, and many of them are reporting students who take advantage of the system to achieve higher grades than they deserve (Kulkarni, 2020). Using this model will allow comparing the predictions for low-performing students with their actual achieved grades and detecting any large discrepancy between them. Although our experiment with e-learning parameters on the given data set obtained a good prediction rate, this might not always be the case, especially for lower educational levels. For example, many raised hands or microphone uses might just be noise that has nothing to do with performance, such as "teacher, can I go to the toilet?" Such noise may decrease as the educational level increases. Good data analysts can understand the context of the collected data and unveil the hidden connections inside it.

It should also be noted that the data on e-learning behaviors were extracted from an LMS. Many schools in the lower grades still rely on virtual meeting software such as Microsoft Teams or Zoom, and have not yet implemented LMS solutions. Little is known about whether Teams or Zoom offer APIs that allow the extraction of e-learning parameters from the software. Education professionals might want to expedite the implementation of LMS solutions such as Blackboard or the open-source LMS Moodle to obtain useful insights about student e-learning behaviors.

Conclusion

In summary, we have presented a model to predict student performance and identify those who need intervention by testing several machine learning methods on a data set of 480 students. Moreover, we evaluated whether the parameters taken from e-learning data alone can predict student performance. The goal of this approach was to evaluate and understand the features of each ML model. We have identified the most important factors that may impact students' academic performance and shown that e-learning parameters alone can predict performance with decent accuracy. Educators can benefit from detecting discrepancies between predicted and actual results in many ways, especially in cases of educational malpractice that result in grade inflation. The study was limited by time, resources, and the low number of data points in the training set. The study also used preprocessed data due to limited access to educational data. Future work can examine extracting the data set directly from either an LMS or the common meeting applications used in e-learning environments, such as Microsoft Teams or Zoom. Validation was also limited to accuracy testing only; future work can apply different measures to evaluate the classification quality, such as Precision, Recall, and F-Measure. Machine learning models might not consistently deliver the expected results. The aim is to utilize the best available tools in the student's best interest, and for the right reasons.

References

- Ahmad, A., Alshurideh, M., Al Kurdi, B., Aburayya, A., & Hamadneh, S. (2021). Digital transformation metrics: A conceptual view. Journal of Management Information and Decision Sciences, 24(7), 1-18.

- Al Kurdi, B., Alshurideh, M., & Salloum, S.A. (2020). Investigating a theoretical framework for e-learning technology acceptance. International Journal of Electrical and Computer Engineering (IJECE), 10(6), 6484–6496.

- Al Kurdi, B., Alshurideh, M., Nuseir, M., Aburayya, A., & Salloum, S.A. (2021). The effects of subjective norm on the intention to use social media networks: An exploratory study using PLS-SEM and machine learning approach. Advanced Machine Learning Technologies and Applications, 324-334. Springer.

- Alaali, N., Al Marzouqi, A., Albaqaeen, A., Dahabreh, F., Alshurideh, M., … & Aburayya, A. (2021). The impact of adopting corporate governance strategic performance in the tourism sector: A case study in the kingdom of Bahrain. Journal of Legal, Ethical and Regulatory Issues, 24(1), 1–18.

- Alghizzawi, M., Ghani, M.A., Som, A.P.M., Ahmad, M.F., Amin, A., Bakar, N.A., … & Habes, M. (2018). The impact of smartphone adoption on marketing therapeutic tourist sites in Jordan. International Journal of Engineering & Technology, 7(4.34), 91–96.

- Al-Khayyal, A., Alshurideh, M., Al Kurdi, B., & Aburayya, A. (2020). The impact of electronic service quality dimensions on customers’ e-shopping and e-loyalty via the impact of e-satisfaction and e-trust: A qualitative approach. International Journal of Innovation, Creativity and Change, 14(9), 257-281.

- Al-Maroof, R.S., Alhumaid, K., Alhamad, A.Q., Aburayya, A., & Salloum, S. (2021a). User acceptance of smart watch for medical purposes: an empirical study. Future Internet, 13(5), 127.

- Al-Maroof, R., Ayoubi, K., Alhumaid, K., Aburayya, A., Alshurideh, M., Alfaisal, R., & Salloum, S. (2021b). The acceptance of social media video for knowledge acquisition, sharing and application: A com-parative study among YouTube users and TikTok Users’ for medical purposes. International Journal of Data and Network Science, 5(3), 197–214.

- Alshurideh, M. (2018). Pharmaceutical promotion tools effect on physician’s adoption of medicine prescribing: Evidence from Jordan. Modern Applied Science, 12(11).

- Alshurideh, M., Al Kurdi, B., & Salloum S.A. (2020). Examining the main mobile learning system drivers’ effects: A mix empirical examination of both the Expectation-Confirmation Model (ECM) and the Technology Acceptance Model (TAM). In: Hassanien A., Shaalan K., Tolba M. (eds) Proceedings of the International Conference on Advanced Intelligent Systems and Informatics 2019.

- AlSuwaidi, S.R., Alshurideh, M., Al Kurdi, B., & Aburayya, A. (2021). The main catalysts for collaborative R&D projects in Dubai industrial sector. In the International Conference on Artificial Intelligence and Computer Vision (pp. 795–806).

- Amrieh, E.A., Hamtini, T., & Aljarah, I. (2016). Mining educational data to predict student's academic performance using ensemble methods. International Journal of Database Theory and Application, 9(8), 119–136.

- Arenson, K.W. (2004). Is it grading inflation, or are students just smarter? The New York Times.

- Aydogdu, S. (2020). Predicting student final performance using artificial neural networks in online learning environments. Education and Information Technologies, 25(3), 1913–1927.

- Capuyan, D.L., Capuno, R.G., Suson, R., Malabago, N.K., Ermac, E.A., Demetrio, R.A.M., … & Medio, G.J. (2021). Adaptation of innovative edge banding trimmer for technology instruction: A university case. World Journal on Educational Technology, 13(1), 31–41.

- Ciaburro, G., & Venkateswaran, B. (2017). Neural Networks with R. Packt.

- Cleophas, T.J., & Zwinderman, A.H. (2020). Machine learning in medicine –A complete overview. Springer.

- Cook, C.R., Kilgus, S.P., & Burns, M.K. (2018). Advancing the science and practice of precision education to enhance student outcomes. Journal of School Psychology, 66, 4–10.

- Emirtekin, E., Karatay, M., & Ki, T. (2020). Online course success prediction of students with machine learning methods. Journal of Modern Technology and Engineering.

- Habes, M., Salloum, S.A., Alghizzawi, M., & Mhamdi, C. (2020). The relation between social media and students’ academic performance in Jordan: YouTube perspective. Advances in Intelligent Systems and Computing, 1058.

- Kulkarni, T. (2020). Students caught indulging in malpractices during online exams. The Hindu.

- Kupchina, E. (2021). Distance education during the covid-19 pandemic. Proceedings of INTCESS 2021 8th International Conference on Education and Education of Social Sciences.

- Lardinois, F., Lynley, M., & Mannes, J. (2017). Google is acquiring data science community Kaggle. Techcrunch.

- Lemay, D.J. & Doleck, T. (2020). Predicting completion of Massive Open Online Course (MOOC) assignments from video viewing behavior. Interactive Learning Environments. Routledge.

- Luan, H., & Tsai, C.C. (2021). A review of using machine learning approaches for precision education. Educational Technology & Society, 24(1), 1176–3647.

- Mouzaek, E., Al Marzouqi, A., Alaali, N., Salloum, S.A., Aburayya, A., & Suson, R. (2021). An empirical investigation of the impact of service quality dimensions on guests satisfaction: A case study of Dubai Hotels. Journal of Contemporary Issues in Business and Government, 27(3), 1186–1199.

- Paek, S.H., Oh, Y.K., & Lee, D.H. (2006). ‘SIDMG: small-size intrusion detection model generation of complimenting decision tree classification algorithm’. In international workshop on information security applications, Berlin, 83–99.

- Salloum, S.A., Al-Emran, M., Abdallah, S., & Shaalan, K. (2017). Analyzing the Arab gulf newspapers using text mining techniques. In International Conference on Advanced Intelligent Systems and Informatics, 396–405.

- Salloum, S.A., Al Ahbabi, N., Habes, M., Aburayya, A., & Akour, I. (2021). Predicting the intention to use social media sites: A hybrid SME- machine learning approach. Advanced Machine Learning Technologies and Applications, 324-334.

- Stevens, E., Antiga, L., & Viehmann, T. (2020). Deep Learning with PyTorch. Manning.

- Taryam, M., Alawadhi, D., Aburayya, A., Albaqa'een, A., Alfarsi, A., … & Salloum, S.A. (2020). Effectiveness of not quarantining passengers after having a negative COVID-19 PCR test at arrival to Dubai airports. Systematic Reviews in Pharmacy, 11(11), 1384-1395.

- Taryam, M., Alawadhi, D., Al Marzouqi, A., Aburayya, A., Albaqa'een, A., … & Alaali, N. (2021). The impact of the covid-19 pandemic on the mental health status of healthcare providers in the primary health care sector in Dubai. Linguistica Antverpiensia, 21(2), 2995-3015.

- Webb, G.I., Keogh, E., Miikkulainen, R., Miikkulainen, R., & Sebag, M. (2011). ‘Naïve bayes’. In Encyclopedia of Machine Learning, 713–714.

- Wu, J., Yang, C., Liao, C., & Society, M.N.E.T. (2021). Analytics 2.0 for Precision Education. Educational Technology & Society, 24(1), 267–279.

- Yang, A., Chen, I., & Flanagan, B. (2021). From human grading to machine grading. Educational Technology & Society, 24(1), 164–175.

- Yang, T.C. (2021). Using an institutional research perspective to predict undergraduate students career decisions in the practice of precision education. Educational Technology & Society, 24(1), 280–296.