Research Article: 2021 Vol: 20 Issue: 6S

The Challenges of Reflective Practice in Shaping Higher Education Academic Managers’ Perceptions of Critical Self-Evaluation Reports in Quality Enhancement

Laurie Brown, Abu Dhabi School of Management

Abstract

This research is set in a specialised graduate business school in the United Arab Emirates. It deciphers the reflective journey that academic managers undertake to complete a Critical Self-Evaluation Report (CSER). The theoretical lens of reflective practice is used to magnify nuances and meaning.

This study of the challenges of reflective practice that influence academic managers’ perceptions of the benefits of, and barriers to, using CSERs was conducted using semi-structured, recorded interviews with three program directors. The interviews were transcribed using Otter.ai software, and the accuracy of the transcripts was confirmed with participants. Thematic analysis of the transcripts produced codes identifying four enablers of, and three barriers to, the process. The study is limited to a single institution at a very early stage in the use of CSERs, and reflective practice may not be a long-term characteristic of the CSER’s enactment.

In agreement with the literature, findings show a dependency on post-delivery reflection-on-action. The participants’ reflective journey is aligned with models of reflection available in the literature. However, participants identify that migrating their practice to an on-program, continuous reflection-in-action method may capture information more readily, improving the accuracy of their CSERs and increasing their impact. In addition, participants’ practices ebbed and flowed between autonomous self-reflective practice, in which they were able to complete program self-evaluation independently, and collaborative communicative reflexivity, which depended upon shared reflection drawn from a range of stakeholders.

The main conclusions are that end-point reflection-on-action informs academic managers’ positive perceptions of the CSER instrument, and that participants do not make use of reflection-in-action to capture quality evaluations contemporaneously throughout the year. In addition, to illuminate problem-solving insights, faculty need to nurture and cultivate their skills of deep reflection to increase the value of the CSERs. Academic managers’ reflective practices align with recently developed classification schemas and established modes of reflection available in the literature, demonstrating the suitability of reflective modes of analysis to the university sector. Furthermore, the study has application beyond the bounds of this university’s improvement tool, demonstrating how such instruments can be evaluated and improved.

This study elucidates the reflective journey that academic managers take to inform their perceptions of the CSER. Additionally, it identifies the benefits and barriers to its effective implementation in quality enhancement practices.

Future research might usefully extend the study to multiple higher education institutions, to examine the degree to which findings can be generalised. Additionally, the constellation of approaches to reflection would benefit from harmonisation into a unifying theory.

Keywords:

Quality Enhancement, CSER, Academic Managers.

Introduction

This paper presents an analysis of academic managers’ reflective practices in their approaches to completing a self-evaluation instrument. This phenomenological study is based on newly introduced Critical Self-Evaluation Reports (CSERs), scrutinised through the theoretical lens of reflective practice available in the literature. It attempts to determine common meaning by inquiring into the lived experiences of individuals (Creswell, 2013).

To complete CSERs successfully, academic managers need to reflect upon their own experiences and those of other stakeholders. They analyse a range of metrics and evaluate the findings. However, if reflection does not lead to meaningful insights, its value in problem-solving is limited (Trowler et al., 2020). In addition, managers’ reflection may be influenced by their past experience and skills of reflection and by their overall perceptions, including of the challenges of the newly introduced CSER process itself. First, they need to address the ‘critical’ component. CSERs require a deeper level of discernment than that previously used in program evaluations. Academic managers are required to evaluate program outcomes and key performance indicators. These are coded into strengths and weaknesses, with an associated time-bound improvement action plan used to remediate the weaknesses. In addition, critical self-evaluation requires accessing international benchmarks and completing a comparative analysis. Second, barriers arise from the changing expectations of practice. CSERs require a transition away from the previous tick-box system, which was used to confirm whether certain aspects of course files were present or absent; beyond this, it contributed little to quality enhancement. As a result, academic managers’ workload has changed, migrating from a simple tick-box exercise taking a few minutes to a comprehensive program self-evaluation taking several hours to complete.

Reflective practices are central in helping to decipher the differences arising from the multi-causal nature of academic managers’ perceptions. In addition, reflection is important in enabling practitioners to deploy the new CSERs effectively as an evaluation and enhancement tool; for example, contemplating the insights that arise may lead to solutions to problems (Trowler et al., 2020). Such perceptions may themselves be understood through different models of reflection. These include Schon’s (1987) reflective practitioner model, Trowler et al.’s (2020) classification schema, and Archer’s (2007) modes of reflexivity.

The four research questions used to navigate this inquiry are documented in Table 1 below. They provide a focus for deciphering the role of reflection in the formation of academic managers’ perceptions.

Table 1 Research Questions

| No. | Research question |
|---|---|
| RQ1 | What do program directors perceive to be the value of the newly introduced critical self-evaluation reports? |
| RQ2 | What different reflective practice categories can be identified from the participants’ responses? |
| RQ3 | How well can participants’ reflections be understood through careful analysis of reflective practice models? |
| RQ4 | What are the purpose and value of participants’ responses to RQ1 and RQ2 for enhancing the usefulness of the reflective aspects of the CSER tool? |

In 2020, the UAE Ministry of Education (MoE) implemented a Framework for the Compliance Inspection of Higher Education Institutions (Compliance Framework). This introduced Critical Self-Evaluation Reports (CSERs) as a vehicle to memorialize a higher education institution’s (HEI’s) strengths and weaknesses (p57). The Compliance Framework’s requirement for the use of CSERs represents a policy mandate that lacks practice-oriented expression. CSERs represent a change in both policy and nomenclature.

The Compliance Framework supplements the work of the accreditation agency and de facto regulator, the Commission for Academic Accreditation (CAA). The major difference is its focus on the UAE’s laws and regulations, rather than on an evaluation of the quality of learning programs (MoE, 2020).

The CAA Standards for Accreditation and Licensure (‘the Standards’; CAA, 2019) govern an HEI’s operations, including institutional licensure and program accreditation. The Standards (and embedded Stipulations) refer to a diverse range of potential synonyms for CSERs. These include: self-studies (p2), self-critical (p9), critically reflective (p12), and analytical self-study (p12). Re-licensure and program re-accreditation document narratives mandate the use of self-studies (p12-13). However, this re-licensure and accreditation language is omitted from the Standards themselves, where the terms program evaluation and effectiveness are used instead (p38, 39).

Neither the CAA nor the MoE provides exemplar CSER tools. As a result, each HEI must decipher the regulations and construct its own tools. This aligns with the expectation that HEIs develop their own internal evaluation system as a step preceding external evaluation (Kovac, 2012). Indeed, the CAA’s external evaluations of HEIs are based on an HEI’s self-studies (CAA, 2019), annual reports (p300), and their impact on improvement planning (p18, 38). This aligns with Macfarlane & Gourlay’s (2009, p455) ‘court of law’ process. Course and program evaluations are linked to obtaining stakeholder views and to methods of teaching and learning. These are captured within course file documentation that the CAA’s reviewers use to evaluate learning outcomes.

The specification for Course Files (CAA 2019, Annex 16, p104) highlights eight themes that require a quality evaluation. These include assessment, course learning outcomes, students’ performance outcomes, and potential improvement measures.

The MoE’s 2020 Compliance Framework mandates that academic CSERs should include strengths and weaknesses (p57), graduate employment rates, and Scopus publication outputs (p72). Other aspects broadly mirror the CAA’s requirements. Consequently, a definitive list of CSER components is not prescribed, and it is up to each HEI to interpret how best to conduct a CSER as it relates to its specific context and its course and program evaluations.

Scrutiny of how national systems impact HEIs is common (Baartman et al., 2013). Recent examples include Vietnam (Pham, 2018), Norway (Mårtensson et al., 2014), and China (Zou et al., 2012).

The research was conducted in a specialist, graduate-only business school in the United Arab Emirates (UAE). It presents an analysis of the reflective practices that academic managers deployed to complete a program self-evaluation tool. All of the sampled academic managers are program directors of master’s level programs. Academic managers took a holistic approach to interpreting the required constituent parts of the CSER. As a result, a 26-metric CSER was developed using existing metrics, already collected for internal or external reporting purposes, together with additional metrics cascading from both the Standards and the organisation’s Strategic Plan. The first program CSERs were completed in April 2020. Subsequently, CSERs were completed for all programs at the end of the 2019/20 academic year (AY).

This phenomenological research is scrutinized through the theoretical lens of reflective practice. The focus is to analyse the value of reflective practice in shaping academic managers’ perceptions, when viewed through the lens of Schon’s (1983) foundational work and Trowler et al.’s (2020) classification schema. In addition, Archer’s (2007) modes of reflexivity provide a further classification of the reflective practices participants used to complete program CSERs.

The method deploys semi-structured interviews with all program directors (participants). Transcribed interviews were then analysed and coded to draw out themes and meanings. This approach is grounded in the literature; examples include a study of the perceptions of six science teachers in South Africa (Moodley & Gaigher, 2017) and an interpretive phenomenological study of three mathematics teachers’ perceptions (Russo & Hopkins, 2019).

Literature Review

The philosopher Descartes’ statement “I think, therefore I am” memorialises the concept of an internal conversation that has become the foundation of both reflective and reflexive practice. Over 300 years later, Schon theorised the relationship between professional practice standards and the role of reflection in informing improvements (Schon, 1983). This is synonymous with Archer’s (2007) self-talk and constellation of concerns (p64) about oneself and the surrounding environment.

Reflective practice has become a social scientists’ playground, spawning a wide variety of ideas and nomenclature. There is no common agreement on reflective practice and its outcomes in terms of characteristics and classification nomenclature (Holland, 2016).

The importance of self-regulation in performance improvement is well documented. The work of Mårtensson et al. (2014) links to that of Archer (2007) in identifying the important role that self-monitoring plays in developing high-performance teams. In addition, Mårtensson et al. (2014) rationalise that the conjunction of quality assurance requirements and actual practice is symptomatic of an organisation’s structure and aims. However, their study is limited by a narrow definition of internal quality assurance as merely the evaluation of teaching and learning activities. They note academic skepticism and the perceived separation of quality assurance from academic life. They promote the idea that, while quality assurance requirements are expressed through policy documents, it is daily practice that develops meaning, and they recognise that reflective practice can bring meaningful change.

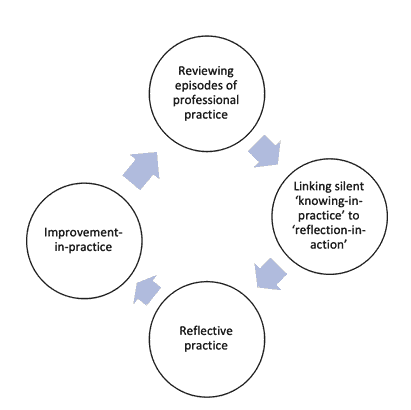

Schon distinguishes individual reflection, based on examining one’s own experience, from community reflexive interaction that considers how others view us. Schon identifies reflection-on-action (intuitive awareness) as enabling practitioners to improve through a cycle of reflection and feedback on other people’s reactions. He links the silent knowing-in-practice (understanding the specific job) to reflection-in-action, ‘thinking on one’s feet’ (live self-critiquing), enabling reflective practice to generate improvements. This aligns with Archer’s (2007) second role of reflexivity, namely the preparedness to develop ad hoc solutions. Schon (1983) identifies a chain reaction that may be summarised as: reviewing episodes of professional practice; linking silent ‘knowing-in-practice’ to ‘reflection-in-action’; leading to ‘reflective practice’; and enabling improvement-in-practice.

There are too many competing styles and metaphors for reflective practice. Holland (2016) promotes emergent reflexivity as an umbrella term to describe the diversity of terms circulating in the subject disciplines. Holland (2016) identifies four progressive levels of reflexivity, rising from self-contained (1) to trans-disciplinary (4) community consensus. By comparison, Archer (2007) has a different reflexivity classification system (communicative, autonomous, meta and fractured). Similarly, Farrell et al. (2019) studied reflection in TESOL teachers, leading to a framework that embraces spiritual, moral and emotional ingredients. They identified three dynamically related themes: approachability; the art-oriented teacher; and curiosity. However, the study is limited to a one-person case study.

Likewise, Greenberger’s (2020) work on how faculty capture their reflections on scholarly activities uses Dewey’s five phases of reflective thinking (suggestion, intellectualization, hypothesis, reasoning, and testing the hypothesis by action). This further demonstrates the wide and fragmented field of practice. Greenberger (2020) reports that faculty reflection has a positive impact on student learning, and that self-awareness arising from reflection can identify strengths and foster acceptance of the need for change. He states: “Improved decision-making can help faculty make more effective choices about teaching strategies, curriculum options, and conducting research.”

Archer (2007) uses reflexivity to explain the internal dialogue that shapes an individual’s response to the world around them, and argues that reflexivity is a changeable property informed by one’s concerns and context (p145). Archer (2007) identifies two primary roles for reflexivity.

First, participants have to recognise themselves in the process. Second, in alignment with reflection-in-action (Schon, 1983), they must develop ad hoc solutions to overcome emergent challenges. Consequently, Archer’s “mode of reflexivity” may help to explain participants’ perceptions of how CSERs identify improvement actions. However, barriers to reflection-led enhancement include ‘denial’ (Macfarlane & Gourlay, 2009) and time, motivation and participants’ expertise at reflection (Greenberger, 2020).

Schmutz & Eppich (2019) exemplify team reflexivity, in which a medical trauma team discusses an earlier case, or jointly discusses past performance, to adapt and inform future trauma practice. They identify three stages of effective reflexivity: (1) a retrospective summary of progress; (2) evaluation of information on successes and failures; and (3) forward planning of improvements based on the outcomes of stages (1) and (2). The approaches academic managers used to enact their CSERs mirror Schmutz & Eppich’s (2019) method in evaluating the strengths and weaknesses of past performance across the AY, combined with developing a time-bound improvement action plan (Archer, 2007). However, the degree of professional dialogue is not extensive, as participants are self-critical of their own past performance. Thus, the process is more reflective than reflexive.

Trowler et al. (2020) categorised reflection strategies into a schema by analysing students’ reflective writing: first, as either mostly reflective or mostly descriptive, and second, into ‘selfie’, ‘quick-fix’, ‘rumination’ or ‘action’ quadrants. This reflects Holland’s (2016) definitions discussed above. However, Macfarlane & Gourlay (2009) question the validity of such reflective assignment methods.

Trowler et al. (2020) view reflection as following two dichotomous paths: either self-focused or problem-solving (Table 2). Self-focused internal reflection develops understanding, whereas external reflection reveals insights that are essential to effective problem-solving. As a result, their classification schema is a useful comparator in this study.

Table 2 Classification for Reflective Practice

| | Internal self-focused reflection | External problem-solving reflection that may lead to insights |
|---|---|---|
| Primarily reflective | Rumination (deep, inward-focused self-critique; less focus on problem-solving) | Action (deep problem-solving reflection; empowerment/agency for change) |
| Primarily descriptive | Selfie (self-focused and descriptive; less reflection than rumination) | Quick-fix (superficial focus on problem-solving; more descriptive and less reflection than ‘action’) |

Source: Trowler et al. (2020), reflective practice classification schema.

Trowler et al. (2020) also point out a danger that rumination may lead to anxiety and depression, caused by repeatedly revisiting issues without obtaining problem-solving insights. Nevertheless, many aspects of their work have application in this study, especially their classification schema.
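Because the schema in Table 2 is a two-by-two grid, coding an excerpt amounts to making two independent judgements and looking up the resulting quadrant. The sketch below illustrates that lookup under stated assumptions: the names `Depth`, `Focus`, `QUADRANTS` and `classify_reflection` are hypothetical and are not part of Trowler et al.’s (2020) work, and the two judgements are assumed to have been made by the researcher beforehand.

```python
from enum import Enum


class Depth(Enum):
    """Row of the schema (hypothetical name): how reflective the excerpt is."""
    REFLECTIVE = "primarily reflective"
    DESCRIPTIVE = "primarily descriptive"


class Focus(Enum):
    """Column of the schema (hypothetical name): where the reflection is directed."""
    INTERNAL = "internal self-focused"
    EXTERNAL = "external problem-solving"


# The four quadrants of Table 2, keyed by (row, column).
QUADRANTS = {
    (Depth.REFLECTIVE, Focus.INTERNAL): "rumination",
    (Depth.REFLECTIVE, Focus.EXTERNAL): "action",
    (Depth.DESCRIPTIVE, Focus.INTERNAL): "selfie",
    (Depth.DESCRIPTIVE, Focus.EXTERNAL): "quick-fix",
}


def classify_reflection(depth: Depth, focus: Focus) -> str:
    """Return the quadrant label for an excerpt already coded on both dimensions."""
    return QUADRANTS[(depth, focus)]


if __name__ == "__main__":
    # A hypothetical coding decision for one interview excerpt.
    print(classify_reflection(Depth.REFLECTIVE, Focus.EXTERNAL))  # action
```

The lookup makes explicit that the interpretive work lies in the two coding judgements; the quadrant label follows mechanically from them.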

Research Methodology

Research Strategy

This qualitative study is based upon semi-structured interviews with three program directors: two associate professors and one full professor. In addition, I reviewed UAE policy documentation. The participants drew upon their experiences of CSERs since their first use at ADSM in 2020. This research method has been used effectively in other studies, including studies of university managers’ perceptions of quality assurance (Pham, 2018) and of perceptions of implementing web learning in the UK (Matthews, 2008; Mårtensson et al., 2014).

In comparison, Watts et al. (2019) used Survey Monkey and Qualtrics to conduct large-scale surveys of faculty members’ perceptions. However, although sample sufficiency would still be assured here (100% of program managers), the actual number of participants is low (n=3), which renders these methods impractical because quantitative data analysis would not be meaningful for so few participants. That said, two aspects from Watts et al. (2019) have been integrated into the semi-structured interview: the notions of value and of time spent.

Nevertheless, studies of this small size are not uncommon. For example:

• three elementary teachers’ perceptions of teaching challenging mathematics topics (Russo & Hopkins, 2019)

• seven teachers’ perceptions of the usefulness of learning analytics data (Van Leeuwen, 2019)

• six grade 9 science teachers’ perceptions, using a questionnaire and semi-structured interviews (Moodley et al., 2017).

Data Collection Technique

Before commencing this research, I obtained ethical approval from each participant to engage in the study. The sample comprises three academic managers who oversee Master’s programs. This is a census that represents all the academic managers in this specialised graduate business school in the UAE. The sample includes the program directors for the:

• Master of Business Administration

• Master of Science in Leadership & Organizational Development

• Master of Quality & Business Excellence / Business Analytics.

Semi-structured interviews were used to address the research questions and to gather nuanced perceptions from academic managers of the challenges and benefits encountered in using the CSER tool effectively. The interview questions provided a reservoir of participants’ reflective habits that could be compared with the literature and examined through the theoretical lens of reflection discussed above. This made it possible to decipher the purpose and value, for enhancing quality, of the reflective categories revealed.

To assure the reliability and validity of the interview questions, the researcher conducted a pilot interview with an ex-program director who had been promoted internally. Pilot interviews are recognised as beneficial in assuring the ‘construct and content’ validity and reliability of research (Dikko, 2016). As a result of the pilot, overlapping questions were deleted to improve the interviews’ reliability. In addition, interview questions were realigned against the research questions. As in other studies (e.g., Cameron & Mackeigan, 2012), the vast majority of questions were designed as qualitative open questions used to assess the factors influencing practices. Very few were closed-ended quantitative questions, used to capture specific data.

Appendix 1 documents the interview schedule and protocols followed. Establishing interview protocols not only standardises the research conditions but is also recognised as helping participants to recall events (Leins et al., 2014). Overall, interview protocols enhance the quality of the interviews (Lamb et al., 2007). Due to the Covid-19 pandemic, all interviews were conducted and recorded in Microsoft Teams. As an additional measure, the researcher also took handwritten notes.

To standardise the process, and to prevent post-interview conversations that might introduce bias or otherwise skew the findings, all interviews took place sequentially on the same day.

The interviews were partitioned into five stages:

1. Introductory questions to put participants at ease

2. In-depth questions linked to research questions

3. Clarifications and link to theory

4. Concluding questions

5. Demographic profile information.

To ensure the validity of the interviews, each interview question was mapped to the research questions and/or lines of inquiry arising from the literature (Appendix 2). Permission was obtained from Pham (2018) to use a few questions from his original Vietnamese case study with little change, bar contextualisation. These include his Section 1, “Impacts during the self-evaluation process”, and Section 3, “After being accredited” (Pham, 2018).

The Microsoft Teams recordings were transcribed using Otter software (https://otter.ai) and exported to Microsoft Word format. Transcriptions were then cross-referenced against the Microsoft Teams recordings to confirm their accuracy. Subsequently, each participant was asked to review and agree the resulting transcription.

Analysis

Qualitative thematic analysis was used to review participants’ interview responses. Thematic analysis is well respected: it is recognised as not only a relevant and trustworthy method, but also well suited to discerning the differences and similarities between participants’ perspectives (Nowell et al., 2017). For example, Bélanger et al. (2011) used thematic analysis, conceptual mapping and critical reflection to construct knowledge about decision-making in medical care, based on 37 research papers.

Young et al.’s (2020) six-stage process helps to ensure that thematic analysis is rigorous and trustworthy, and it has been adapted for use in this study. Van den Heuvel et al. (2014) similarly adopted an inductive grounded approach. In addition, Van Leeuwen’s (2019) analysis of teachers’ perceptions used an integrated approach combining inductive analysis of the raw data with deductive reasoning informed by the theoretical lens of scaffolding. These and other studies use a stepped approach to identify codes and themes of meaning. In this study, the stepped approach is linked to the perceived enablers and inhibitors of the CSER. As a result, this study has used Young et al.’s (2020) process, together with features of other studies, to build a phased analysis process, outlined in Table 3 below.

Table 3 Phases of Data Analysis

| Phase | Activity |
|---|---|
| Phase 1: Data familiarization | Reading and re-reading of the transcripts to gain an overview of participants’ thoughts and experiences. As with Nowell et al. (2017), an Excel spreadsheet was developed to record participants’ raw data. This was aligned with the corresponding interview questions, easing the flow of the iteration process and smoothing the comparability of participants’ responses discussed in Phase 2 below. In addition, transcripts were analysed for key words using NVivo 12 software. |
| Phase 2: Identifying ideas and creating initial codes | I used an inductive grounded approach to generate codes. This involved iteration: reviewing ideas to develop initial codes from the data exhibited and relevant to the research questions. |
| Phase 3: Identifying themes | Creswell’s (2014) systematic approach was used to categorise ideas into themes reflecting the meaning of the participants’ thoughts and experiences. Ideas and codes were reviewed using comparative analysis to identify recurring themes and subthemes, which were consolidated into themes by inductive reasoning. Themes communicate participants’ overarching experience by unitising sometimes fragmented ideas into consolidated thoughts. |
| Phase 4: Refining themes and subthemes | Iteration, by the constant comparison method, was used to revisit the data, moving back and forth between the codes and the data to look for similarities and differences. This enabled me to identify threads of meaning linked to the enablers and inhibitors of the CSER, and to use the theoretical lens of reflection to expose what may have shaped the participants’ perspectives. Coding key attributes into themes followed. During this stage, some less well-substantiated themes were deleted, and others were subsumed within themes for which the evidence more strongly substantiated their significance. |
| Phase 5: Finalising themes | Themes were reviewed and, if necessary, updated to improve the articulation of their meaning. This enabled the finalisation of a nomenclature that quickly communicated meaning in the fewest words. |

Source: adapted from approaches described in Young et al. (2020); Van Leeuwen (2019); Nowell et al. (2017); and Van den Heuvel et al. (2014).

Table 4 shows the 195 key words used by participants to discuss their interaction with the CSER process, as identified using NVivo 12 software.

Table 4 Initial Keywords

| Key word | Frequency |
|---|---|
| course | 18 |
| program | 17 |
| level | 17 |
| student | 16 |
| faculty | 10 |
| unit | 9 |
| semester | 7 |
| view | 6 |
| time | 6 |
| side | 6 |
| date | 5 |
| action | 5 |
| program level | 5 |
| report | 5 |
| self-evaluation | 5 |
| evaluation | 5 |
| assurance | 5 |
| part | 4 |
| sustainable | 4 |
| problem | 4 |
| thing | 4 |
| calendar | 4 |
| individual faculty members | 4 |
| effectiveness | 4 |
| satisfaction | 4 |
| next semester | 4 |
| point | 4 |
| plan | 4 |
| management | 4 |
| teaching | 4 |
| data | 4 |
| head | 4 |
| objectives | 3 |
| class | 3 |
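NVivo 12 is proprietary software, so its exact word-frequency rules are not reproduced here. As a rough, hypothetical illustration of what a keyword-frequency query of this kind does, the sketch below counts single words and two-word phrases (such as “program level” or “next semester” in Table 4) in a transcript using only Python’s standard library. The stop-word list, the tokenisation rule and the function names are assumptions, and the counts will not match NVivo’s output.

```python
import re
from collections import Counter

# Assumed, deliberately small stop-word list; NVivo applies its own list.
STOP_WORDS = {
    "the", "a", "an", "and", "of", "to", "in", "is", "it", "that", "we",
    "i", "you", "for", "on", "at", "this", "be", "are", "with", "as", "so",
}


def tokenise(text: str) -> list[str]:
    """Lower-case the text and split it into word tokens (letters, hyphens, apostrophes)."""
    return re.findall(r"[a-z][a-z'-]*", text.lower())


def keyword_frequencies(text: str, top_n: int = 10) -> list[tuple[str, int]]:
    """Count content words and two-word phrases, most frequent first.

    Phrases are pairs of words that are adjacent once stop words have been
    removed, so this only approximates true multi-word terms.
    """
    words = [w for w in tokenise(text) if w not in STOP_WORDS]
    bigrams = [f"{a} {b}" for a, b in zip(words, words[1:])]
    counts = Counter(words) + Counter(bigrams)
    return counts.most_common(top_n)


if __name__ == "__main__":
    sample = "The program level report covers the next semester."
    for term, count in keyword_frequencies(sample, top_n=5):
        print(term, count)
```

Applied to each transcript, such a count only surfaces candidate terms; deciding which of them carry meaning remains the interpretive work of Phases 2 to 4 in Table 3.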

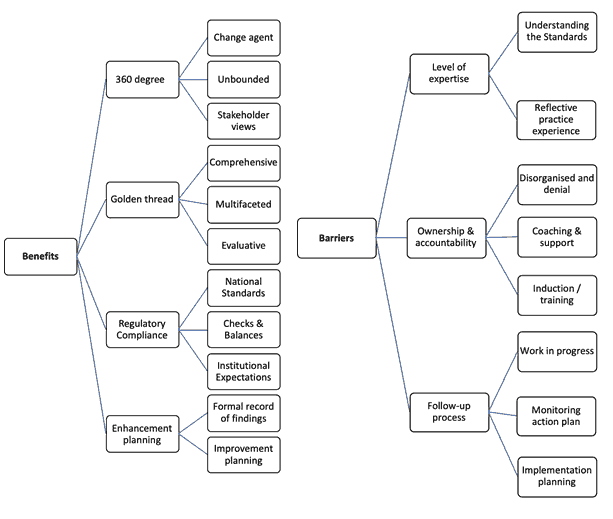

Two major themes emerged, benefits and barriers, each with associated subthemes (Figure 1). I performed further shortlisting analysis to reveal 34 key words. Additional iteration and analysis enabled their distillation into the four categories identified in Table 5.

Table 5 Third Level Analysis

| Major theme | Frequency |
|---|---|
| Comprehensive Golden Thread | 9 |
| Regulatory Compliance | 9 |
| Enhancement Planning | 7 |
| 360-degree analysis | 3 |
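Phases 3 and 4 of the analysis consolidate initial codes into themes, and Table 5 reports one count per major theme. The study does not describe any software for this step, so the following is only a hypothetical sketch of how such a tally can be kept once a codebook exists: each initial code maps to one theme, and coded excerpts are counted per theme. The entries in `CODEBOOK` are invented examples, not the study’s actual codes, and `tally_themes` is a hypothetical helper.

```python
from collections import Counter

# Hypothetical codebook: each initial code is consolidated into exactly one theme.
# These code labels are illustrative only, not the study's actual codes.
CODEBOOK = {
    "360-degree evaluation": "360-degree analysis",
    "stakeholder feedback": "360-degree analysis",
    "links course together": "Comprehensive Golden Thread",
    "CAA standards": "Regulatory Compliance",
    "improvement plan": "Enhancement Planning",
}


def tally_themes(coded_excerpts: list[str], codebook: dict[str, str]) -> Counter:
    """Count coded excerpts per theme; codes missing from the codebook are skipped."""
    return Counter(codebook[code] for code in coded_excerpts if code in codebook)


if __name__ == "__main__":
    excerpts = ["CAA standards", "improvement plan", "CAA standards"]
    print(tally_themes(excerpts, CODEBOOK))
```

Keeping the codebook explicit also leaves an audit trail of which codes were subsumed into which theme, which supports the credibility concern raised in the limitations below.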

Limitations

A limitation is the ‘insider research’ approach, which carries the risk of skewing the data. The participants might have felt obliged to present model answers similar to those they might give to external stakeholders, or the approach may otherwise have inhibited the constructivist dialogue (Moodley & Gaigher, 2017). However, as I do not line-manage the participants, who are overseen by an Academic Dean, the risk is reduced. Also, as suggested by Nowell et al. (2017), credibility might have been strengthened if more than one researcher had analysed the data.

In addition, this single-institution study limits generalisation, a feature noted in other studies (Adachi et al., 2018). A study with a wider sample would be needed to test whether these findings generalise.

Research Findings

The participants’ perspectives on CSERs identify four key benefits of, and three barriers to, their implementation. Interviews lasted between 64 and 75 minutes. This variation allowed each participant to express their responses without being guillotined.

A thematic framework emerged from inductive reasoning. It conceptualises the relationship between participants’ responses and experiences and is presented in Figure 1.

Benefits

360-degree perspective

Participants valued the CSER as a change agent designed to monitor the effectiveness of program delivery. They regarded the CSER as an unbounded, 360-degree, powerful, multi-faceted evaluation tool. The instrument engaged both academic and non-academic stakeholders, such as student services, and was used in improvement planning. Most managers identified the importance of change to raise quality and to formalise the updating of pedagogical practices, including syllabi and textbooks.

Participant 1 stated: “if your program needs to have some sort of progression, that means then you need continuous feedback. So that's one of the reasons why the CSER has been introduced, where in I said 360-degree evaluation.”

Participant 2 confirmed that: “..it’s more of a 360 degrees process, in the sense that you're not just dealing with yourself, as a professional here, you're dealing with the students, you’re dealing with the staff, you're attending to external stakeholders, I would say more consideration of a range of extraneous variables.”

Comprehensive ‘golden thread’

All participants initially regarded completing the 26 metrics of the CSER as ‘tedious’ compared with the previous seven instructor tick-box questions. This aligns with Macfarlane & Gourlay’s (2009) finding of initial denial. However, their perceptions have since shifted to regard the CSER as a comprehensive evaluative tool: a ‘golden thread’ that draws reflection from common ground and links the course together, for example in determining the achievement of course learning outcomes.

Participant 2, describing his journey of perception from ‘tedious’ to a ‘comprehensive’ ‘golden thread’, said: “The first time I saw it, the first word that popped into my mind was ‘tedious’.” Subsequently, as his understanding shifted, he stated that it evaluates the program in a ‘comprehensive manner’, so that “tedious became more of an impression of comprehensiveness.” He concludes: “ …without the salient features of the CSER… you will fail in addressing data information that you must address including from other stakeholders, to enhance the program …and that’s a golden thread.”

Participant 1 elucidated: “CSER is not just a document, it is an eye opener for a manager like me, that there are a lot of data I need to look into hooked into several units inside ADSM that then create an impetus of change in the process.”

Regulatory compliance

CSERs are used to demonstrate course and program adherence to national standards and to document the quality of delivery. They provide a vehicle of ‘checks and balances’ that validate and verify performance and indicate how well faculty have met institutional expectations.

For example, Participant 2 linked the CSER to the need to demonstrate compliance with the CAA 2019 Standards: “it is meant for compliance purposes, you know, assurance quality assurance purposes” and “will have very good standing in terms of accreditation with the CAA and in compliance with MoE in general”.

Likewise, Participant 3 drew a strong link to regulatory requirements, stating:

“it's down to quality assurance in delivering of the courses in line with the accreditation requirements…we are looking at CAA standards …, then we need to make sure that the course delivery, the course assessment and the students itself learning objectives are actually fulfilling all the standards.”

Enhancement Planning

Participants reported high levels of satisfaction with the evaluative tool. Course and program CSERs provide a formal record of findings that informs improvement action planning. Some of these changes may be implemented immediately, while others may require longer-term milestones.

Broader understanding of the purpose of the CSER can be shallow, treating it simply as a means of completing course files. Participants themselves, however, articulated its enhancement purpose. For example, Participant 1 stated: “Basically, it's for programme enhancement; to identify the gaps and benchmark themselves; what they are doing good, what they are not doing well, and the areas of improvement.”

Participant 2 states that “when we put forward a report, …we give it substantial recommendations, we draw important information together in what we need to do to embark on in terms of improvement and improvement plans” and that CSERs help to “really pin-point essential points of improvement.” Furthermore, “it is a much bigger scientific tool, a better agent of change as an academic manager and strategically important in the diagnosis of performance.”

Participant 3 similarly stated: “these kinds of potentials are opportunities for improvement, or gaps that needs to be addressed; has to be addressed, hopefully before the next delivery, whichever the possibilities arises if it can be addressed immediately, then it will be addressed. If it's not, then it could be part of a strategy in the future.”

Participants’ satisfaction levels with the CSER are documented in Table 6 below. Overall, participants are positive about the CSER but recognise that more work is needed to improve its effective use. In this, they demonstrate a degree of meta-reflexivity (Archer, 2007), looking for ways to improve the instrument further and match it to their practices.

Table 6 Satisfaction Levels With the CSER Evaluation Tool

| | Participant 1 | Participant 2 | Participant 3 |
|---|---|---|---|
| Satisfaction level | 80% | 80% | 60% |
| Comment | Identifies gaps perfectly well, but the Covid pandemic has impacted progress with developmental reviews. | A few aspects are not captured effectively by faculty, and stakeholder buy-in needs to be strengthened. | The team is still new to implementing the tool and still needs to establish baseline norms. |

Barriers

Level of expertise

In agreement with Greenberger (2020), participants’ level of expertise affected the effectiveness of their reflection. This includes challenges in understanding the Standards and objectives, and how these are incorporated into the CSER. One participant stated that this required overwhelming effort and was looking for ways to streamline the process and improve its balance of purpose. Interestingly, Participant 3 is the least experienced in self-evaluation and is also the least content with the process (Table 6 above). Typical of these reflections, Participant 2 recognised the “essential element of expertise” involved in challenging the veracity of the facts presented by his team and their interpretation.

Ownership and accountability

Faculty do not always see the value of the CSER and, in denial, abdicate responsibility. At the course level, the majority of faculty were disorganised and required much coaching and support. Insufficient use has been made of orientation to the process and of preparation for reflecting on the 26 detailed metrics. One manager sought other mechanisms to monitor progress and plan actions.

This theme is exemplified by Participant 1, who observed the behavioural dimension affecting the effective completion of CSERs. Disorganised faculty members needed much coaching, which required maintaining support phone logs. These faculty did not see the benefits of CSERs and generally lacked any sense of ownership of the process of evaluating their own courses. Occasionally, this reflected a lack of orientation to the role and purpose of the CSER.

Unclear follow-up process

All participants recognised that the quality of CSERs was a ‘work in progress’ requiring further development. In particular, the next steps for monitoring action plans were not clear. One participant proposed that a detailed strategy was required to implement the problem-solving solutions. This included developing a symbiotic relationship with other units, such as the Curriculum Development Committee, to collaborate on the enhancement process, and integrating milestones into the automated quality calendar. This suggests the need to extend the skill set required of academic managers, either on appointment or through subsequent professional development. Similarly, Participant 3 commented on the challenge of finding the time required to monitor the implementation of action plans.

Reflection Classification Schema

Two out of three participants felt the process was mostly descriptive, with a minority of evaluative reflections, broadly equating to a 60:40 split. This substantiates the division in Trowler et al.’s (2020) classification schema between primarily descriptive and primarily reflective approaches. All participants found that the CSERs successfully enabled them to identify problem areas. Furthermore, they gained illuminating insights that provided a stimulus for improvement action planning. Nevertheless, responses are mostly descriptive rather than reflective and focused on problem-solving insights. In a few cases, more ‘discernment’ in the information was needed. This aligns with Trowler’s descriptive ‘quick-fix’ quadrant (Trowler et al., 2020).

One participant reported that the process initially starts as descriptive and that, through collaborative reviews, greater objectivity and reflection develop, particularly if collaborative reflexivity with faculty members and the Dean is utilised. This confirms Archer’s rationalisation that reflexivity is a mutable feature responding to participants’ “concerns and context” (Archer, 2007).

Reflection-on-action

Participants’ experience of reflective practice was dominated by Schon’s reflection-on-action. Reflection occurs retrospectively at the end of the course or program. One participant valued the insights arising from these ‘touch-points’ in action planning, for example to improve retention rates or to change class sizes. This participant even proposed that CSER outcomes could be used to develop job descriptions and to inform faculty evaluation and key performance indicators.

Reflection-in-action

Participant 1 recognised the desirability of ongoing reflection, capturing progressive experience so that CSERs are completed throughout the year. This would enable him to implement improvement actions quickly, benefiting existing students and building an effective community of stakeholders.

Table 7 documents the demographic background of each of the three participants. All have less than one year’s experience of using CSERs. Collecting the biographical profiles of participants has proven helpful in similar studies (for example, Adachi et al., 2018; Moodley & Gaigher, 2017).

Table 7 Participants’ Profiles

| | Participant one | Participant two | Participant three |
|---|---|---|---|
| Nationality | Indian | Filipino | Singaporean |
| Gender | Male | Male | Male |
| PhD award year | 2014 | 2008 and 2015 | 2003 |
| Education experience (years) | 5 | 21 | 21 |
| Program director experience (years) | 1 | 9 (including in other HEIs) | 6 (including in other HEIs) |
| Academic rank | Associate Professor | Professor | Associate Professor |

Overall, reflection-on-action works well. For two out of three participants, this approach met their needs. They have identified strengths and weaknesses and developed a monitored, time-bound improvement action plan, albeit at the quick-fix level (Trowler et al., 2020). Nevertheless, longer-term sustainable action planning and embedding of the instrument require additional input, particularly in training and supporting faculty to interpret the regulatory standards and the institution’s 26 internal metrics. Participant three, although positive overall, considered there to be a misalignment between the Standards and actual operational practices. This ‘denial’ reflects Pham’s (2018) findings. Practice showed that participants’ approaches aligned with Schon’s (1983) reflection-on-action method, limiting reflection to the end of the course or year. Nevertheless, one participant promoted the benefits of reflection-in-action to generate evaluative judgements during the process of delivery. Reflection-on-action might enable academic managers to reduce bias through collaboration and to validate the evidence presented.

The findings also have implications for the appointment of academic managers. As outlined in Table 7, participants’ experience ranged from five to 21 years in education and from one to nine years as academic managers. Setting experience thresholds might raise managers’ familiarity with the Standards, management practice and quality assurance processes, increasing their evaluative skills and broadening the range of action planning responses.

Participant three identified several challenges to reflection relating to dependency on other information and data owners, including the student services and IT units. Participants reported that the time required to gather evidence before completing the reflective exercise varied, and that failing to plan for this slowed progress. The custody of information often rested with other data owners. This suggests the need to adopt a whole-organisation approach to reflection, for example by systematising touch-point information and data flow reporting requirements.

This study affirms the value of Trowler’s (2020) work in identifying quadrants of reflective activity, with participants’ approaches characterised as primarily descriptive within the quick-fix quadrant. However, greater impact from reflection may be obtained by raising performance to the action quadrant (Trowler et al., 2020). The penetrating reflection in this zone requires skills development to move practice from descriptive to reflective, liberating the potential for the problem-solving insights that give durability to the change process. Participants’ bias toward descriptive reflection aligns with Greenberger’s (2020) findings of a need to develop competencies in reflective practice and to establish an institutional culture of the reflective practitioner (Schon, 1983). In this scenario, managers coach and mentor their subordinates to develop their reflective skills, thereby migrating practice from primarily descriptive to primarily reflective (Trowler, 2020). To support this, the quality assurance unit might develop a cycle of periodic training in reflective practice skills (Schon, 1983).

Discussion

The study was conducted through the theoretical lens of reflective practice. This included Schon’s reflective practice (1983), Trowler’s (2020) classification schema and Archer’s modes of reflection (2007).

Semi-structured interviews with academic managers were analysed iteratively to find ideas and meaning, and then coded into categories. Overall, academic managers welcomed the CSER tool and valued its 360-degree scope in providing a thorough and thoughtful range of metrics that strengthened the evidence that the Standards are being met. It meets expectations as an effective internal precursor to external evaluation (Kovac, 2012).

Schon's Reflective Practitioner Model (1983) and the ‘quick-fix quadrant’ of Trowler’s (2020) reflection classification schema provide a helpful overarching understanding of participants’ reflections. Participants’ approach to reflection closely mirrors Schon’s (1983) stages outlined in Figure 2.

The Reflective Journey

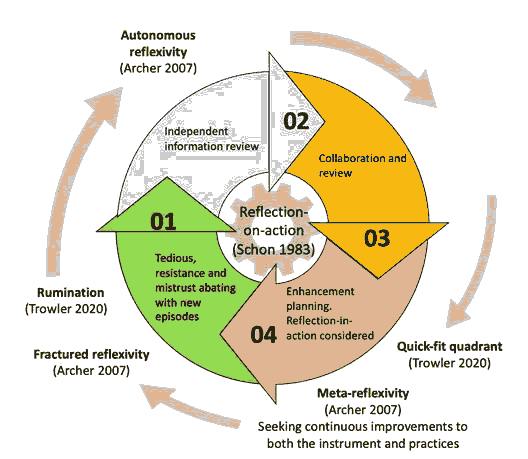

A careful analysis of participants’ reflective approaches provides insight into their ‘reflective journey’. Participants’ reflective practices align well with models of reflection including Schon (1983), Trowler et al. (2020) and Archer (2007). This is illustrated in Figure 3 below.

Figure 3: Participants’ Reflective Journey Aligned With Models Of Reflection Available In The Literature.

Participants primarily use reflection-on-action (Schon, 1983). Episodes of performance are reviewed at the end of each course (term) and academic year. However, reflective practices are mostly descriptive. This provides a degree of insight, enabling surface-level ‘quick-fix’ solutions (Trowler, 2020) or even ad hoc solutions (Archer, 2007). Additionally, this aligns with Greenberger’s (2020) observation that reflection leads to both improved decision-making and improvement action planning.

However, participants’ reflections do not provide the insights that Trowler et al. (2020) associate with deep problem-solving reflection, and which in turn inform sustainable solutions. Improving participants’ reflective skills may increase insight and enable sustainable solutions, aligning practice with the powerful ‘action quadrant’ of Trowler’s schema (2020).

Archer’s model (2007) provides additional understanding of participants’ journey of reflection in completing their CSERs. As similarly noted by Macfarlane & Gourlay (2009), participants initially found the process ‘tedious’, becoming clouded by the size of the task and extraneous variables. In this they demonstrated ‘fractured reflexivity’ (Archer, 2007), making slow progress. Trowler et al. (2020) recognise that such ‘rumination’ may lead to anxiety and depression without yielding sufficient problem-solving insights, thus inhibiting the process. Similarly, Pham (2018) documents resistance and mistrust by academics towards newly introduced processes. This affirms Mårtensson’s (2014) rationalisation that the conjunction of quality assurance requirements and actual practice is symptomatic of an organisation’s structure and aims. The ‘tedious’ perspective reflects Mårtensson’s (2014) finding that academic scepticism can underpin a perceived separation between quality assurance and academic life.

However, through further episodes, which ultimately led participants to value the process as a 360-degree multi-faceted improvement tool, they demonstrated autonomous reflexivity (Archer, 2007). This was seen particularly in gathering preparatory information and documents together to make provisional judgements. This progressed into communicative reflexivity (Archer, 2007), which participants viewed as important to ensure a quality outcome, for example through confirming and validating observations and collaborating to obtain others’ perspectives. This affirms the changeable nature of reflexivity, aligned with Archer’s (2007) ‘context and concerns’.

Two out of three participants proposed moving the process from reflection-on-action to reflection-in-action (Schon, 1983). They believe this would help faculty improve the process of continuous course reflection and provide intervention opportunities without waiting for implementation through the annual program CSER. This is also promoted by other researchers to enhance quality (Schmutz & Walter, 2018).

Similarly, practitioners’ meta-reflexive skills (Archer, 2007) are evident in their ambition to further improve the program-level tool in collaboration with information owners. One participant found that the CSER addresses an immediate need in demonstrating adherence to the Standards. Nevertheless, he identified the need to improve the process, particularly in collaboration with other information owners.

Overall, participants are ambitious to develop a practice-oriented model and to align operational practices with regulatory requirements (Mårtensson et al., 2014). This affirms Mårtensson’s (2014) rationalisation that the conjunction of quality assurance requirements and actual practices is symptomatic of an organisation’s structure and aims.

As discussed previously, there are a number of theories and approaches to reflection, such that the practice may best be described as ‘emergent reflexivity’ (Kovac, 2012). These include, but are not limited to, Holland’s (2016) four levels of emergent reflectivity, Archer’s (2007) four levels of reflexivity and Trowler et al.’s (2020) reflective practice schema. Future research to harmonise approaches and classification systems may lead to a useful unifying theory of reflexivity.

Conclusion

The significance of this study extends beyond academic managers’ use of reflective practice to complete critical self-evaluation reports (CSERs) in universities. The study demonstrates how skilful examination of the reflective practice tools available in the literature may be used to appraise, deconstruct and synthesise innovative improvements to self-evaluation instruments. The study thus has meaning and importance in extending the usefulness of these tools beyond university operations into the wider educational sector, enabling an enhanced systematic approach to quality improvement.

As suggested by Kovac (2012), CSERs provide an internal quality system in preparation for external regulatory evaluation of an HEI’s quality and performance. Furthermore, the study documents the need for faculty to develop and extend their reflective skills.

Findings show that reflection-on-action (Schon, 1983) characterised how managers evaluate courses and programs once they have finished. Interviews with participants illuminated four benefits of the CSER (360-degree improvement tool; comprehensive ‘golden thread’; regulatory compliance; and enhancement planning) and three barriers to its implementation (interpreting the Standards; ownership and accountability; and an unclear follow-up process).

Insufficient use is made of deep reflection, recognised as desirable for effective action planning (Trowler, 2020), which might yield enhanced insights leading to sustainable problem-solving actions. Instead, participants’ reflections remained largely descriptive, but nevertheless enabled quick-fix action planning (Trowler, 2020).

Trowler’s (2020) reflective practice classification schema can be used effectively to profile academic managers’ reflections. Furthermore, it provides potential target-setting opportunities that may be used to improve practitioners' reflective skills and move performance from the ‘quick-fix’ quadrant into the more impactful ‘action’ quadrant.

Participants do not use reflection-in-action (Schon, 1983). However, this emerged as a potential alternative model due to its continuous self-evaluation and improvement opportunities. It would capture contemporaneous perspectives. Participants suggested that benefits might arise from the continuous review model and from greater use of collaborative reflection with information owners, assembling snapshot evaluations throughout the year.

Future Research

Further research should expand the study to multiple institutions and review the impact that reflective practice has on the internal quality assurance processes native to these institutions. This should be followed by developing best practice models and frameworks, potentially scaffolding quality enhancement opportunities through skilfully designed faculty professional development programs.

Researchers’ approaches to the internal conversation are inconsistent and lack uniformity. This has spawned a large number of classifications and schemas, presenting an opportunity to review and assemble the constellation of approaches into a unifying theory.

Acknowledgement

The author acknowledges the support of academic staff on the Doctoral Programme in Higher Education Research, Evaluation and Enhancement at Lancaster University, from which this publication has arisen. http://www.lancaster.ac.uk/educational-research/study/phd/phd-in-higher-education-research,-evaluation-and-enhancement/

References

- Adachi, C., Tai, J.H.M., & Dawson, P. (2018). Academics' perceptions of the benefits and challenges of self and peer assessment in higher education. Assessment and Evaluation in Higher Education, 43(2), 294-306.

- Archer, M.S. (2007). Making our Way through the World. Cambridge: Cambridge University Press.

- Baartman, L., Gulikers, J., & Dijkstra, A. (2013). Factors influencing assessment quality in higher vocational education. Assessment and Evaluation in Higher Education, 38(8), 978-997.

- Bélanger, E., Rodríguez, C., & Groleau, D. (2011). Shared decision-making in palliative care: A systematic mixed studies review using narrative synthesis. Palliative Medicine, 25(3), 242-261.

- CAA (2019). Standards for institutional licensure and accreditation. MoE.

- Cameron, A.J., & MacKeigan, L.D. (2012). Development and pilot testing of a multiple mini-interview for admission to a pharmacy degree program. American Journal of Pharmaceutical Education, 76(1), 10.

- Creswell, J.W. (2013). Qualitative inquiry & research design: Choosing among the five approaches (3rd ed.). Sage.

- Dikko, M. (2016). Establishing construct validity and reliability: Pilot testing of a qualitative interview for research in Takaful (Islamic Insurance). Qualitative Report, 21(3), 521.

- Farrell, T.S.C., & Kennedy, B. (2019). Reflective practice framework for TESOL teachers: One teacher’s reflective journey. Reflective Practice, 20(1), 1-12.

- Greenberger, S.W. (2020). Creating a guide for reflective practice: Applying Dewey's reflective thinking to document faculty scholarly engagement. Reflective Practice, 21(4), 458-472.

- Holland, R. (2016). Reflexivity. Human Relations (New York), 52(4), 463-484.

- Kovac, T. (2012). The model of self-evaluation in higher education. Economic and Social Development: Book of Proceedings, 59.

- Lamb, M.E., Orbach, Y., Hershkowitz, I., Esplin, P.W., & Horowitz, D. (2007). A structured forensic interview protocol improves the quality and informativeness of investigative interviews with children: A review of research using the NICHD Investigative Interview Protocol. Child Abuse & Neglect, 31(11), 1201-1231.

- Leins, D.A., Fisher, R.P., Pludwinski, L., Rivard, J., & Robertson, B. (2014). Interview protocols to facilitate human intelligence sources' recollections of meetings. Applied Cognitive Psychology, 28(6), 926-935.

- Macfarlane, B., & Gourlay, L. (2009). The reflection game: Enacting the penitent self. Teaching in Higher Education, 14(4), 455-459.

- Mårtensson, K., Roxå, T., & Stensaker, B. (2014). From quality assurance to quality practices: An investigation of strong microcultures in teaching and learning. Studies in Higher Education (Dorchester-on-Thames), 39(4), 534-545.

- Matthews, N. (2008). Conflicting perceptions and complex change: Promoting web-supported learning in an arts and social sciences faculty. Learning, Media and Technology, 33(1), 35-44.

- MoE (2020). Framework for the Compliance Inspection of Higher Education Institutions. Ministry of Education.

- Moodley, K., & Gaigher, E. (2017). Teaching Electric Circuits: Teachers’ Perceptions and Learners’ Misconceptions. Research in Science Education, 49(1), 73-89.

- Nguyen, T.T.K., & Chun-Yen, C. (2020). Measuring teachers’ perceptions to sustain STEM education development. Sustainability (Basel, Switzerland), 12(4), 1531.

- Nowell, L.S., Norris, J.M., White, D.E., & Moules, N.J. (2017). Thematic analysis. International Journal of Qualitative Methods, 16(1), 160940691773384.

- Pham, H.T. (2018). Impacts of higher education quality accreditation: A case study in Vietnam. Quality in Higher Education, 24(2), 168-185.

- Rodger, D., & Stewart-Lord, A. (2020). Students’ perceptions of debating as a learning strategy: A qualitative study. Nurse Education in Practice, 42, 102681.

- Russo, J., & Hopkins, S. (2019). Teachers’ Perceptions of Students When Observing Lessons Involving Challenging Tasks. International Journal of Science and Mathematics Education, 17(4), 759-779.

- Schon, D. (1983). The reflective practitioner: How professionals think in action. New York: Basic Books.

- Schmutz, J.B., & Eppich, W.J. (2019). When I say … team reflexivity. Medical Education, 53(6), 545-546.

- Trowler, V., Allan, R.L., Bryk, J., & Din, R.R. (2020). Penitent performance, reconstructed rumination or induction: student strategies for the deployment of reflection in an extended degree programme, Higher Education Research & Development, 39(6), 1247-1261.

- Van den Heuvel, M., Au, H., Levin, L., Bernstein, S., Ford-Jones, E., & Martimianakis, M.A. (2014). Evaluation of a Social Pediatrics Elective. Clinical Pediatrics, 53(6), 549-555.

- Van Leeuwen, A. (2019). Teachers’ perceptions of the usability of learning analytics reports in a flipped university course: When and how does information become actionable knowledge? Educational Technology Research and Development, 67(5), 1043-1064.

- Watts, S.W., Chatterjee, D., Rojewski, J.W., Shoshkes R., Carol, B., Tracey, G., Kathleen, L, . . . & Ford, J.K. (2019). Faculty perceptions and knowledge of career development of trainees in biomedical science: What do we (think we) know? PLoS One, 14(1), e0210189.

- Young, C., Roberts, R., & Ward, L. (2020). Hindering resilience in the transition to parenthood: A thematic analysis of parents' perspectives. Journal of Reproductive and Infant Psychology, 1-14.

- Zou, Y., Du, X., & Rasmussen, P. (2012). Quality of higher education: Organisational or educational. Quality in Higher Education, 18(2), 169-184.