Research Article: 2021 Vol: 27 Issue: 3

Machine Moderators in Content Management System Details Essentials for IoT Entrepreneurs

Wahiduzzaman Khan, Ahsanullah University of Science and Technology

Alim Al Ayub Ahmed, Jiujiang University

Siddhartha Vadlamudi, Xandr

Harish Paruchuri, Anthem, Inc.

Apoorva Ganapathy, Adobe Systems

Abstract

The need for content moderation is indispensable, as publishers cannot allow inappropriate or illegal content to be published through or on their platforms. Every publisher is also responsible for checking works to ensure that their content is ethical, so that readers and viewers receive clean and appropriate content. Machine moderators may be the all-important response to the growing need for moderators. The push for machine moderators is often justified as a necessary response to the sheer scale of content that must be reviewed. Machines driven by artificial intelligence (AI) can make content moderation faster and possibly more accurate than human moderators. This work examines the meaning of content moderation, how machines are used for content moderation, and the advantages of using machines for it. It also highlights significant innovations in the use of machine moderators in content management. Artificial intelligence (AI) refers to the simulation of human intelligence in machines programmed to think like humans and mimic their actions. The term may also be applied to any machine or computer that exhibits traits associated with the human mind, such as problem-solving and learning. The ideal characteristic of artificial intelligence is its ability to rationalize, learn, and take the actions that have the best chance of achieving the intended goals and objectives.

Keywords

Machine Moderators, Content Management, Artificial Intelligence, IoT Entrepreneurs.

Introduction

Content creation has become a booming venture for people in the 21st century, who use the enormous resources of the Internet to unleash their potential. The Internet is reported to have over 4.5 billion users, who generate vast numbers of images, videos, messages, and other forms of content daily for public consumption. It has therefore become imperative to regulate this avalanche of content in some way, because Internet users want to visit these platforms (social media platforms or online vendors) safely, without having a negative experience. Content moderation emerged as the solution (Aggarwal, 2018). Content moderation is the practice of observing and applying an established set of rules and principles to user-generated content to determine whether a particular item is allowed and whether it is fit for public consumption (Ahmed, 2020). The technique removes any material judged too obscene, vulgar, fake, fraudulent, harmful, or otherwise unsuitable for readers.

Authors and academic writers alike have traditionally relied on people to review their articles manually and intensively. This manual form of content moderation is a daunting task that is expensive, time-consuming, and largely inefficient (Koetsier, 2020). Facing growing demand, most organizations involved in intellectual publishing are investing in deep learning (DL) techniques to build algorithms that can manage content automatically, without depending solely on human editors (Azad et al., 2021).

This article argues that effective content moderation requires full and careful consideration of critical public issues, and that moderated content should be reader-friendly and free of grammatical errors and poor sentence construction. The alternative use of machine moderators for content moderation, primarily of academic articles, is seen as a desirable development, chiefly because of the speed, accuracy, efficiency, and effectiveness it promises. The machine only needs to be fed a set of ground rules, including rules for auto-correction, sentence construction, punctuation detection, and the absence of vulgarity. However, adopting machine moderators does not eliminate the human factor; rather, it makes humans more proficient at producing error-free intellectual articles. Humans provide technical support, especially when these error-detecting machines malfunction or wear out, whether through poor maintenance or because a replacement has become necessary. They also feed the machines the appropriate set of instructions to work on. No two intellectual contents are the same, so content moderation systems must be designed to attend appropriately to the uniqueness of each piece of content. This paper acknowledges the imperfections of human content editors. Consequently, it argues that machine moderators are most effective for managing educational content intended for public consumption, especially because of their detailed, word-for-word approach, which leaves no stone unturned.

What Is Content Moderation?

The quality of any content is determined mainly by the extent to which it meets the standards of the community that consumes it. Every community in which content is published, whether academic, religious, musical, or video content, ordinarily has an accepted threshold for the language used, the quality of the information, and even the correctness of its sentence structure. Given these common assessment factors, the need for manual or automated content moderation cannot be overemphasized. Content moderation is the skillful monitoring and regulation of user-generated posts or publications by applying a set of defined rules and guidelines. The technique covers many kinds of content, not only Internet posts but also written scholarly works, videos, and pictures, and applies a pre-determined set of rules and regulations to produce the results the content moderator or editor wants (Vadlamudi, 2021a). These pre-determined rules may include external requirements, such as making sure the content complies with copyright law, and internal requirements, such as making sure posts comply with the platform's terms and conditions or that a written publication is plagiarism-free and free of errors. Both people and machines can be used effectively to manage content in great detail (Paruchuri, 2019). How organizations choose to monitor user activity depends on the type of social media platform or digital community involved; in every case, the aim is to preserve the quality of the information they intend to convey.
Whether the material is text, adverts, images, profiles, or videos placed in forums, online communities, social media pages, or websites, the goal of most content moderators, and of the moderation strategy they choose, is to maintain brand credibility, trustworthiness, and security for businesses and their followers, online or offline (Suler, 2004). Enterprises can also manage content themselves; for instance, a platform can assign a person the job of moderating the comments on a blog or website. Notably, huge platforms such as Facebook have entire units dedicated to monitoring the content on their websites, which also promote narratives that serve the platform's overall interests while keeping clients and visitors engaged. Content moderation is essential because it ensures that content adheres to local, national, and international law as well as to the desired standards. The use of double-checkers on most blog articles has enabled most bloggers to avoid expensive and career-threatening copyright lawsuits.

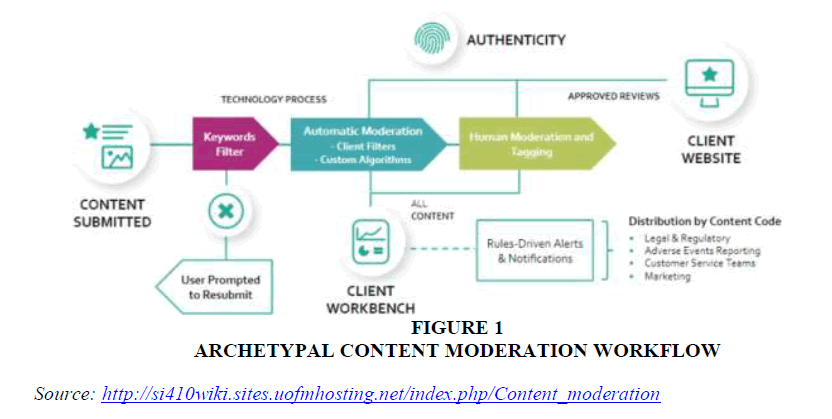

In the absence of content moderation, there would be no restrictions on what could be published, and users could publish anything they liked without consequences (although many people believe that automatic content moderation on websites infringes their right to free expression). Content moderation can be carried out internally or externally. For example, an academic researcher at Harvard Business School may choose to delegate the task of responding to reviews and comments about the business strategy insights on his online platform to an employee (Ahmed et al., 2013). Figure 1 shows the archetypal content moderation workflow: if a response does not adhere to the company's terms and conditions, it is removed. The social media giant Twitter uses this content moderation technique to maintain order on its platform, removing fake, spurious, and unfounded stories as well as inciting and obscene material.

Figure 1: Archetypal Content Moderation Workflow

Source: http://si410wiki.sites.uofmhosting.net/index.php/Content_moderation

What Are Machine Moderators?

Machine moderation means that programmed machines handle user-generated content according to the platform's rules and guidelines, which have been fed into the computer. Depending on the platform's policy, machine moderators may automatically accept user-generated content, delete it, or send it to a human moderator. Machine moderators provide an excellent solution for Internet-based platforms that want to give priority to quality user-generated content (Paruchuri, 2021), and they help keep users safe when interacting on these sites. Because of the many lapses and imperfections of the traditional method of content editing or moderation, which ultimately cost valuable time, machine moderation has become very popular in the 21st century, thanks to the digital age. A widely cited Microsoft study reported that humans, on average, stay attentive for just eight seconds. Authors, writers, media platforms, and content creators in general therefore cannot afford slow publication of user-produced content, or they risk losing their users. Likewise, users who are faced with substandard quality, inappropriate content, scams, and the like are likely to leave the site immediately. So what does that mean for us? For content creators to maintain their time-tested quality standards, they may need to consider using machine moderators, that is, artificial intelligence (AI). Some content moderation relies on teams of technically skilled people who ensure that the content has proper sentence structure, the desired context, originality, and no errors. In other cases, moderation relies on machines (artificial intelligence). Manual moderation by humans is the most effective strategy for providing personalized replies to online comments and reviews. Still, the time it takes and the number of people it requires are considerable.
For large websites that publish and maintain a lot of content, AI is essential. Facebook and YouTube use AI to detect content based on certain words and phrases and on whether the description matches what is published. Content in clear violation of the rules can be flagged immediately and automatically deleted from the site or, in the case of an academic article, highlighted and removed. However, machine moderation seems to work best when combined with close attention from human moderators, because there are considerable limits to what a machine can do. For instance, algorithms cannot reliably identify sarcasm and irony in a post, so their reading may not match the content creator's intention. While an AI may flag a piece of content, a human being may determine whether or not it should be removed. This amounts to a symbiotic use of the available content management methods. Machine moderators can also use AI models trained on platform-specific data to catch, efficiently and accurately, unwanted user-produced content that does not meet the platform's guidelines for acceptance. A well-trained machine moderation solution can make highly accurate automated moderation decisions.
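The accept, delete, or send-to-human behavior described above can be sketched as a pair of thresholds applied to a harm score. This is a minimal sketch, assuming a hypothetical upstream classifier that returns a score between 0 and 1; the names `route`, `Policy`, and `Decision` and the threshold values are illustrative assumptions, not any platform's actual settings.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACCEPT = "accept"
    HUMAN_REVIEW = "human_review"
    DELETE = "delete"


@dataclass(frozen=True)
class Policy:
    # Hypothetical thresholds; a real platform would tune these on its own data.
    review_threshold: float = 0.4
    delete_threshold: float = 0.9


def route(harm_score: float, policy: Policy = Policy()) -> Decision:
    """Map a (hypothetical) classifier's harm score in [0, 1] to a decision."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must lie in [0, 1]")
    if harm_score >= policy.delete_threshold:
        return Decision.DELETE        # clear violation: remove automatically
    if harm_score >= policy.review_threshold:
        return Decision.HUMAN_REVIEW  # uncertain (e.g. possible sarcasm): a human decides
    return Decision.ACCEPT            # low risk: publish
```

For example, `route(0.6)` falls between the two thresholds and returns `Decision.HUMAN_REVIEW`. Keeping a middle band for human review reflects the point above that AI may flag content while a human makes the final call on ambiguous cases.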

What Contents Get Moderated?

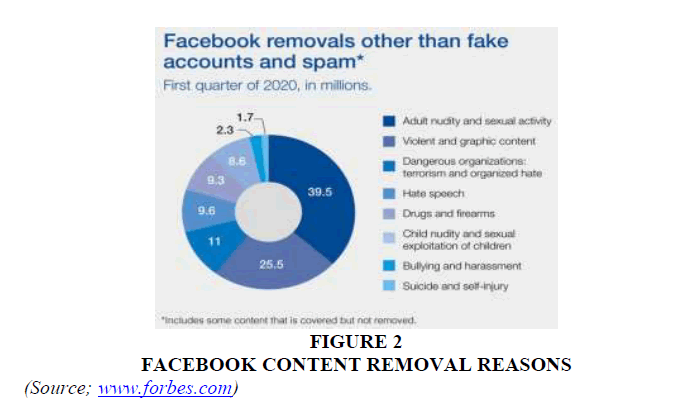

It is worth getting into the details. Some kinds of content are key targets of content moderation systems on all fronts. Ordinarily, content is managed in two ways: by warning users before they submit suspected abusive content, or by deleting or rejecting it (in the case of academic articles) and punishing the author after the fact (especially where warnings appear to have been deliberately ignored). Figure 2 shows the reasons Facebook removes content. The categories of content that most moderators scrutinize most harshly are the following:

Figure 2: Facebook Content Removal Reasons

Source: www.forbes.com

Abusive Content

Some content is aimed at harassing and abusing specific people or groups online. This category, abusive content, includes behaviors such as hate speech, racist remarks, cyberbullying, and content that incites violence. Such behaviors are sometimes hard to define, and most publishing platforms have tried to recognize them automatically using AI and content management systems (Schomer, 2019). Cyberbullying and attacks on social media have affected many people in terrible ways; some cases have unfortunately led to self-harm and even suicide. Media companies such as Facebook, Instagram, and Twitter have been pressured to add reporting options and sophisticated content management systems to detect abusive content. Most machine moderation systems use a combination of image processing and natural language processing along with social network analysis.

Fake / Misleading content

Some content is fake and intended to mislead consumers. This category covers false and misleading content published on social networks to hasten the spread of incorrect and inaccurate information, usually in the form of news and articles. The phrase "fake news" has become commonplace in politics. Identifying fake news is difficult for human moderators, as it is nearly impossible to check the authenticity of every article posted manually. Current content management systems use techniques such as drawing on a large common-sense knowledge base, incorporating multiple reputation-based factors, and applying natural language processing together with social network analysis and technical signals.

Nudity / Explicit content

Some content is inappropriate and offensive to consumers. Sexually explicit content and nudity can be very harmful to consumers, and machine moderators use image processing techniques to detect this category of content. Different platforms allow different levels of nude or sexually explicit content. For example, Facebook and Instagram have zero tolerance and do not allow such content, whereas Reddit does not restrict it as long as it is legal. Tumblr recently changed its policy and no longer allows pornography and most nudity on its platform.
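Because platforms tolerate different levels of explicit content, the same detector output can lead to different decisions. The sketch below assumes a hypothetical upstream image detector that reports whether an item is explicit; the platform names and the `ExplicitContentPolicy` type are illustrative and do not encode any real platform's rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExplicitContentPolicy:
    allow_legal_explicit: bool  # may legal explicit material stay up at all?


# Hypothetical platforms illustrating the two stances described above.
POLICIES = {
    "strict_platform": ExplicitContentPolicy(allow_legal_explicit=False),
    "permissive_platform": ExplicitContentPolicy(allow_legal_explicit=True),
}


def decide(platform: str, flagged_explicit: bool, is_legal: bool = True) -> str:
    """Turn the same detector output into a platform-specific decision."""
    if not is_legal:
        return "remove"  # illegal material is removed on every platform
    if not flagged_explicit:
        return "allow"
    return "allow" if POLICIES[platform].allow_legal_explicit else "remove"
```

Separating the detector from the per-platform policy means one model can serve platforms with opposite stances; only the configuration changes.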

Inciting Violence and Racism

Any content that shows sufficient evidence of its capacity to incite violence between identifiable communities is rejected outright, and appropriate sanctions are imposed. Whether expressed in graphics, text, or video, provocative racial publications are treated by most content moderators as an unpardonable offense. Most content moderators, like those used by Twitter and Facebook, go as far as banning the creator of such highly inflammatory content; a well-known example is Donald Trump's posts about the Capitol Hill violence on Facebook and Twitter, which led to his ban from both platforms.
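The escalation described in this section, from warnings to sanctions and ultimately to banning a creator, can be sketched as a simple strike counter. The three-step ladder and the `severe` shortcut for content such as incitement to violence are assumptions for illustration, not the policy of Twitter, Facebook, or any other platform.

```python
from collections import defaultdict
from enum import Enum


class Sanction(Enum):
    WARNING = "warning"
    SUSPENSION = "suspension"
    BAN = "ban"


# Hypothetical escalation ladder: the first offence warns, later offences escalate.
LADDER = [Sanction.WARNING, Sanction.SUSPENSION, Sanction.BAN]


class StrikeTracker:
    def __init__(self) -> None:
        self._strikes = defaultdict(int)  # user_id -> number of recorded strikes

    def record_violation(self, user_id: str, severe: bool = False) -> Sanction:
        """Record one violation and return the sanction it triggers."""
        if severe:
            # e.g. content inciting violence: jump straight to the last rung
            self._strikes[user_id] = len(LADDER)
        else:
            self._strikes[user_id] += 1
        step = min(self._strikes[user_id], len(LADDER)) - 1
        return LADDER[step]
```

Repeat offenders stay at `Sanction.BAN` once they reach the top of the ladder, which mirrors the "punishment after the fact" for ignored warnings described earlier in this section.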

Scams, phishing, and hacking

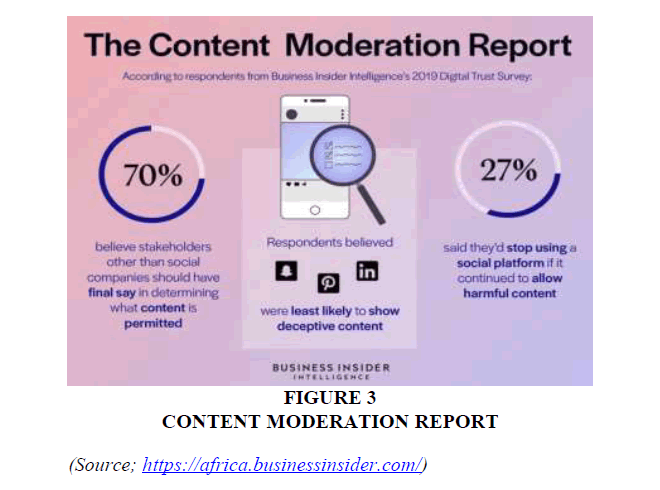

This category of content is intended to deceive and scam consumers. Platforms prohibit this type of content and remove it to protect their users. Figure 3 shows a content moderation report. Content of this kind usually tries to lure users away from the platform to a different site, where the external site tries to scam them by harvesting their personal information or asking them to send money to an unknown party (Ahmed et al., 2021). The most common technique is to imitate the URL of the original site, which can be done through homograph attacks, or to offer the user an attractive deal, usually a better one, on purchases made through the platform. This type of content can be identified through natural language processing, reputation-based factors derived from social network analysis, and the collection of known phishing links and accounts.

Figure 3: Content Moderation Report

Source: https://africa.businessinsider.com/
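Two of the signals mentioned above for scams and phishing, lists of known phishing links and URLs that imitate a legitimate site, can be checked with a few lines of code. This is a minimal sketch under stated assumptions: the host lists are hypothetical, edit distance is a swapped-in heuristic for spotting look-alike domains, and punycode (`xn--`) or non-ASCII host names are only flagged as possible homographs rather than decoded and compared.

```python
from urllib.parse import urlsplit

# Hypothetical data: a feed of known phishing hosts and the legitimate
# domains a platform wants to protect from imitation.
KNOWN_PHISHING_HOSTS = {"secure-login.example.net"}
PROTECTED_HOSTS = {"example-shop.com"}


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute if different
        prev = cur
    return prev[-1]


def check_link(url: str, max_distance: int = 2) -> str:
    """Classify a link as known_phishing, possible_homograph, lookalike, or ok."""
    host = (urlsplit(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[len("www."):]
    if host in KNOWN_PHISHING_HOSTS:
        return "known_phishing"
    # Punycode ("xn--") labels or raw non-ASCII hosts can hide homograph look-alikes.
    if not host.isascii() or any(label.startswith("xn--") for label in host.split(".")):
        return "possible_homograph"
    for legit in PROTECTED_HOSTS:
        if host != legit and levenshtein(host, legit) <= max_distance:
            return "lookalike"
    return "ok"
```

For instance, `check_link("https://examp1e-shop.com/login")` is one substitution away from the protected domain and returns `"lookalike"`. A threshold of 2 is an arbitrary choice that trades missed look-alikes against false alarms on short domain names, and a real system would combine such URL checks with the language and reputation signals described above.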

Significant Advantages of the Use of Machine Moderators

Research shows that the amount of data uploaded to the Internet every day is a mind-blowing 2.5 quintillion bytes. Even working at top speed, human moderators, the Internet's gatekeepers, can review only a tiny fraction of user-generated content (Vadlamudi et al., 2021; Vadlamudi, 2021). A single minor slip is enough to tarnish a human moderator's reputation. Continued publication of harmful content on a platform through human error or negligence can put users and viewers at risk in the long run. Human error and oversight are likely, because moderation is a taxing job for humans (Amin & Vadlamudi, 2021), and going through large volumes of hateful and damaging content takes its toll. Machine moderators can help resolve this problem. They can be taught through machine learning (Vadlamudi, 2020b) to identify specific patterns of content and certain words. For instance, where a platform is trying to reduce profanity, adult content, sexual language, violence, bullying, racism, spam, or fraud, machine moderators (AI) can learn to detect this type of content. By examining what human moderators deem harmful, AI moderators learn from good examples of what is and is not acceptable content on the platform, and they improve continually as technology advances. Through machine learning, the AI gets better at recognizing specific words and the context of those words (Donepudi et al., 2020). While the emotions behind content remain better suited to human judgment, AI and humans can work hand in hand to monitor content effectively.
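Learning from what human moderators deem harmful can be illustrated with a tiny text classifier. The sketch below uses multinomial naive Bayes, a swapped-in technique chosen for brevity rather than the method of any specific platform, trained on a hypothetical handful of human-labelled examples; the class and function names are illustrative.

```python
import math
import re
from collections import Counter

TOKEN_RE = re.compile(r"[a-z']+")


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into simple word tokens."""
    return TOKEN_RE.findall(text.lower())


class NaiveBayesModerator:
    """Multinomial naive Bayes with add-alpha (Laplace) smoothing."""

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha
        self.word_counts = {True: Counter(), False: Counter()}
        self.doc_counts = {True: 0, False: 0}
        self.vocab = set()

    def fit(self, examples: list[tuple[str, bool]]) -> "NaiveBayesModerator":
        # Each example is (text, harmful) as labelled by a human moderator.
        for text, harmful in examples:
            tokens = tokenize(text)
            self.word_counts[harmful].update(tokens)
            self.doc_counts[harmful] += 1
            self.vocab.update(tokens)
        return self

    def _log_posterior(self, tokens: list[str], label: bool) -> float:
        # Unnormalized log P(label) + sum of log P(token | label).
        # Assumes both labels occurred at least once in training.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        denom = sum(self.word_counts[label].values()) + self.alpha * len(self.vocab)
        for tok in tokens:
            if tok in self.vocab:  # words never seen in training carry no evidence
                score += math.log((self.word_counts[label][tok] + self.alpha) / denom)
        return score

    def is_harmful(self, text: str) -> bool:
        tokens = tokenize(text)
        return self._log_posterior(tokens, True) > self._log_posterior(tokens, False)


# Hypothetical human-labelled examples (True = harmful).
LABELLED = [
    ("you are an idiot and a loser", True),
    ("idiot go away loser", True),
    ("I will hurt you idiot", True),
    ("thanks for the helpful article", False),
    ("great post thanks", False),
    ("I found this article helpful", False),
]
```

With these examples, `NaiveBayesModerator().fit(LABELLED).is_harmful("what a loser idiot")` returns `True`. A real system would need far more data, and a bag-of-words model like this one still cannot read sarcasm or context, which is why the text keeps humans in the loop.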

Downsides to Machine Moderators in CMS

One downside is the potential for job losses: the use of AI in content moderation could cost human content moderators their jobs, as more work would be done in less time by fewer people working with an AI. Another is algorithmic bias inherited from the human judgments embedded in the training data (Doewes et al., 2021). While these downsides should not be ignored, it is worth noting that advances in machine moderation can create better business and better lives for everyone (Paruchuri et al., 2021; Ganapathy, 2017). If correctly implemented, artificial intelligence has immense potential.

Advances in Machine Moderators and Their Potential Future Impact

The recent progress in machine moderators has been driven mainly by machine learning. Machine learning allows a computer system to make decisions and, through algorithmic calculations, predict outcomes without being explicitly programmed for the task. For this approach to work, the system needs a data set or a training environment to learn from. "Deep neural networks," a breakthrough development in machine learning in recent years, have enabled "deep learning" for machines. Neural networks allow a computer system to recognize complex data such as speech and images. This advancement has raised the quality of these applications' performance on specific tasks: on some tasks they have been trained to a level comparable to humans, although errors still occur.
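The idea of a system learning a decision rule from data instead of being explicitly programmed can be shown with a single artificial neuron (a perceptron), the simplest building block of the neural networks described above; it is not a deep network. The two features per post (count of flagged words and count of links) and the six training examples are hypothetical.

```python
def predict(weights: list[float], bias: float, x: list[float]) -> int:
    """Fire (1 = harmful) when the weighted sum of features crosses zero."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, lr=1.0, max_epochs=1000):
    """Classic perceptron rule: nudge the weights after every mistake."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0 or +1
            if error:
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                mistakes += 1
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


# Hypothetical posts as [flagged_word_count, link_count]; 1 = harmful.
SAMPLES = [[3, 1], [4, 2], [2, 3], [0, 0], [1, 0], [0, 1]]
LABELS = [1, 1, 1, 0, 0, 0]
```

Nobody writes the rule "flag posts with many flagged words or links" by hand here; the weights that encode it are found from the labelled examples. Deep networks stack many such units with nonlinear activations and are trained by gradient descent rather than this simple update rule.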

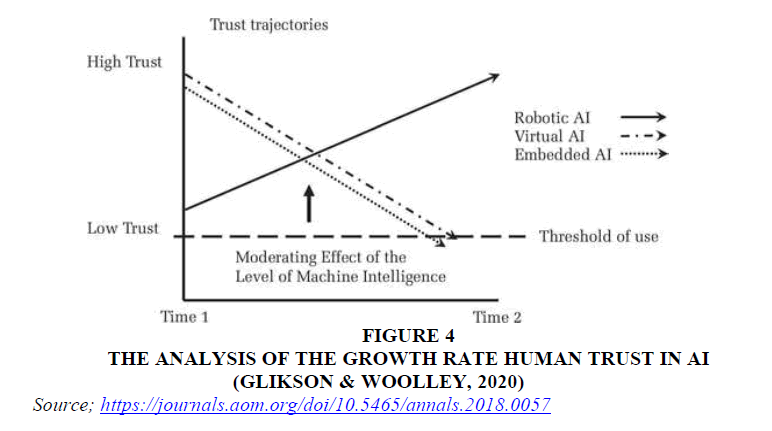

Like most technologies, machine moderators and AI-driven progress in algorithm development will continue to advance in the coming years. This development may be hampered by several factors: the lack of transparency of some algorithms, which may not fully explain the reasoning behind their actions and decisions, and society's low level of trust in moderating machines and AI (Ganapathy, 2015). People place more trust in humans handling complex tasks than in computers. There also seem to be too few qualified personnel to develop and implement machine moderators and AI. Glikson & Woolley (2020) presented an analysis of the growth rate of human trust in AI (see Figure 4). As technology advances, trust and confidence in AI and machine moderators are likely to increase as these systems are seen performing tasks well and are used in more aspects of our lives.

Figure 4: Analysis of the Growth Rate of Human Trust in AI (Glikson & Woolley, 2020)

Source: https://journals.aom.org/doi/10.5465/annals.2018.0057

The Potential Impact of Machine Moderators on CMS

Machine moderators (AI) are likely to play a crucial role in content management systems in the following ways:

a) Machine moderators may be used in the pre-moderation stage to flag content for review by humans, which would increase moderation accuracy and improve the pre-moderation stage. One technique is "hash matching," in which the content to be reviewed is checked against a database of known harmful content; for example, the fingerprint of an image may be computed and compared with a database of fingerprints of known harmful images. Another technique is "keyword filtering," in which words and phrases with potentially harmful meanings are used to flag content. Although these techniques give AI moderators a tremendous impact on content management, they have limitations (Sharma, 2020). Detecting languages, symbolic speech such as sarcasm, and emojis is problematic, particularly because slang changes. Neural network techniques such as recurrent neural networks can allow more advanced analysis of content, especially content that is challenging to moderate. "Metadata" encodes data essential to moderation decisions about content, such as the user's history on the site, the number of friends or followers, and other information about the user's actual identity.

b) Machine moderators can be used to synthesize training data, which would help improve pre-moderation performance. Generative AI techniques such as generative adversarial networks (GANs) can create images of harmful content, which may be used in place of existing examples of harmful content when training machines (Vadlamudi, 2020a).

c) Machine moderators can support manual moderation by increasing moderators' productivity and reducing the potentially harmful effects of content moderation on humans. A machine moderator reduces the workload and the amount of damaging content that human moderators are exposed to, lessening the detrimental impact of the work on them (Ganapathy, 2016). Machine moderators can also increase the effectiveness of individual moderators by prioritizing the content they should review according to the level of harm it may cause. AI may also ease language issues in content management by providing high-quality translation. With an automated content management system, the productivity of individual moderators increases and the side effects of viewing damaging content can be reduced (Zhu et al., 2021).
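The pre-moderation techniques in item a) and the prioritization in item c) can be combined in a short sketch: hash matching and keyword filtering flag an item, and flagged items enter a review queue ordered by estimated harm. This assumes hypothetical fingerprint and keyword lists and an arbitrary scoring rule. It also uses SHA-256, which only matches byte-identical files; deployed systems typically use perceptual hashes (Microsoft's PhotoDNA is a well-known example) that survive resizing and re-encoding.

```python
import hashlib
import heapq
import itertools
import re
from typing import Optional

# Hypothetical fingerprint database of known harmful files.
KNOWN_HARMFUL_HASHES = {hashlib.sha256(b"bytes of a known harmful image").hexdigest()}

# Hypothetical keyword list; real lists are platform-specific and change with slang.
FLAGGED_KEYWORDS = ["free money", "idiot"]


def hash_match(data: bytes) -> bool:
    """Exact fingerprint lookup against the known-harmful database."""
    return hashlib.sha256(data).hexdigest() in KNOWN_HARMFUL_HASHES


def keyword_flags(text: str) -> list[str]:
    """Return flagged keywords that appear as whole words (case-insensitive)."""
    return [
        kw for kw in FLAGGED_KEYWORDS
        if re.search(r"\b" + re.escape(kw) + r"\b", text, flags=re.IGNORECASE)
    ]


class ReviewQueue:
    """Pops the most harmful item first; ties keep arrival order."""

    def __init__(self) -> None:
        self._heap: list[tuple[float, int, str]] = []
        self._counter = itertools.count()

    def push(self, content_id: str, harm_score: float) -> None:
        # heapq is a min-heap, so the score is negated to surface the worst item first.
        heapq.heappush(self._heap, (-harm_score, next(self._counter), content_id))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


def pre_moderate(queue: ReviewQueue, content_id: str, text: str,
                 attachment: Optional[bytes] = None) -> float:
    """Score one item and enqueue it for human review if anything was flagged.
    The 0.3-per-keyword weight is an arbitrary illustrative choice."""
    score = 0.0
    if attachment is not None and hash_match(attachment):
        score = 1.0  # identical to a known harmful file
    score = max(score, min(1.0, 0.3 * len(keyword_flags(text))))
    if score > 0:
        queue.push(content_id, score)
    return score
```

The word-boundary match keeps "idiotic" from triggering "idiot", but, as noted in item a), a fixed keyword list cannot keep up with changing slang, sarcasm, or emojis.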

Conclusion

Machine moderation has brought a new level of objectivity and consistency to the content management system, unrivaled by human, manual content moderation. Driven by machine learning, it optimizes the moderation process by using algorithms that learn from existing data. Machine moderators also allow the content management system to make sophisticated review decisions about user-generated content. Like the builders of other innovations, developers of machine moderators try to make them intelligent and quick, allowing faster and more accurate content moderation than manual moderation. Through automation, machine moderators can screen every item of content before it is published for public consumption. This is not to say that machine moderators are perfect or free of hitches; their use has shortcomings and downsides as well as benefits. Over time, machine moderators should improve through machine learning and the inflow of new data. This would help IoT entrepreneurs put machine moderators in a better and more advanced position for content management.

References

- Aggarwal, A. (2018). Genesis of AI: The First Hype Cycle. SCRY Analytics. https://scryanalytics.ai/genesis-of-ai-the-first-hype-cycle/

- Ahmed, A.A.A. (2020). Corporate attributes and disclosure of accounting information: Evidence from the big five banks of China. Journal of Public Affairs, e2244. https://doi.org/10.1002/pa.2244

- Ahmed, A.A.A., Siddique, M.N., & Masum, A.A. (2013). Online Library Adoption in Bangladesh: An Empirical Study. 2013 Fourth International Conference on e-Learning "Best Practices in Management, Design and Development of e-Courses: Standards of Excellence and Creativity", Manama, 216-219. https://doi.org/10.1109/ECONF.2013.30

- Ahmed, A.A.A., Paruchuri, H., Vadlamudi, S., & Ganapathy, A. (2021). Cryptography in Financial Markets: Potential Channels for Future Financial Stability. Academy of Accounting and Financial Studies Journal, 25(4), 1–9.

- Amin, R., & Vadlamudi, S. (2021). Opportunities and Challenges of Data Migration in Cloud. Engineering International, 9(1), 41-50.

- Azad, M.M., Ganapathy, A., Vadlamudi, S., & Paruchuri, H. (2021). Medical Diagnosis using Deep Learning Techniques: A Research Survey. Annals of the Romanian Society for Cell Biology, 25(6), 5591–5600. Retrieved from https://www.annalsofrscb.ro/index.php/journal/article/view/6577

- Doewes, R.I., Ahmed, A.A.A., Bhagat, A., Nair, R., Donepudi, P.K., Goon, S., Jain, V., Gupta, S., Rathore, N.K., & Jain, N.K. (2021). A regression analysis based system for sentiment analysis and a method thereof. Australian Official Journal of Patents, 35(17), Patent number: 2021101792.

- Donepudi, P.K., Banu, M.H., Khan, W., Neogy, T.K., Asadullah, A.B.M., & Ahmed, A.A.A. (2020). Artificial Intelligence and Machine Learning in Treasury Management: A Systematic Literature Review. International Journal of Management, 11(11), 13–22.

- Ganapathy, A. (2015). AI Fitness Checks, Maintenance and Monitoring on Systems Managing Content & Data: A Study on CMS World. Malaysian Journal of Medical and Biological Research, 2(2), 113-118.

- Ganapathy, A. (2016). Speech Emotion Recognition Using Deep Learning Techniques. ABC Journal of Advanced Research, 5(2), 113-122.

- Ganapathy, A. (2017). Friendly URLs in the CMS and Power of Global Ranking with Crawlers with Added Security. Engineering International, 5(2), 87-96.

- Glikson, E., & Woolley, A.W. (2020). Human Trust in Artificial Intelligence: Review of Empirical Research. Academy of Management Annals, 14, 627–660.

- Koetsier, J. (2020). Report: Facebook Makes 300,000 Content Moderation Mistakes Every Day. Forbes. https://www.forbes.com/

- Paruchuri, H. (2019). Market segmentation, targeting, and positioning using machine learning. Asian Journal of Applied Science and Engineering, 8(1), 7-14. Retrieved from https://journals.abc.us.org/index.php/ajase/article/view/1193

- Paruchuri, H. (2021). Conceptualization of machine learning in economic forecasting. Asian Business Review, 11(1), 51-58.

- Paruchuri, H., Vadlamudi, S., Ahmed, A.A.A., Eid, W., & Donepudi, P.K. (2021). Product Reviews Sentiment Analysis using Machine Learning: A Systematic Literature Review. Turkish Journal of Physiotherapy and Rehabilitation, 23(2), 2362-2368.

- Schomer, A. (2019). THE CONTENT MODERATION REPORT: Social platforms are facing a massive content crisis — here's why we think regulation is coming and what it will look like. INSIDER. https://www.businessinsider.com/content-moderation-report-2019-11

- Sharma, B. (2020). How Does Content Moderation Using AI Work? skyl.ai. https://blog.skyl.ai/

- Suler, J. (2004). The Online Disinhibition Effect. Cyberpsychology & Behavior, 7(3), 321-326. http://doi.org/10.1089/1094931041291295

- Vadlamudi, S. (2020a). Internet of Things (IoT) in Agriculture: The Idea of Making the Fields Talk. Engineering International, 8(2), 87-100.

- Vadlamudi, S. (2020b). The impacts of machine learning in financial crisis prediction. Asian Business Review, 10(3), 171-176.

- Vadlamudi, S. (2021). The Economics of Internet of Things: An Information Market System. Asian Business Review, 11(1), 35-40.

- Vadlamudi, S. (2021a). The Internet of Things (IoT) and Social Interaction: Influence of Source Attribution and Human Specialization. Engineering International, 9(1), 17-28.

- Vadlamudi, S., Paruchuri, H., Ahmed, A.A.A., Hossain, M.S., & Donepudi, P.K. (2021). Rethinking Food Sufficiency with Smart Agriculture using Internet of Things. Turkish Journal of Computer and Mathematics Education, 12(9), 2541–2551.

- Zhu, Y., Kamal, E.M., Gao, G., Ahmed, A.A.A., Asadullah, A., & Donepudi, P.K. (2021). Excellence of Financial Reporting Information and Investment Productivity. International Journal of Nonlinear Analysis and Applications, 12(1), 75-86.